Redesigning a digital identity verification platform

ROLE

Product Designer, UX Researcher

TIMELINE

Nov'22 - Nov'23

Identity verification sits at the critical intersection of security, compliance, and user experience. I recently led the modernization of an enterprise identity verification platform (which I'll call "AuthDoc" for this case study) that companies had long relied on to authenticate their users remotely and in person.

While AuthDoc had served its core purpose, it had fallen behind modern user expectations and the organization's expanding business objectives. The company recognized this gap and launched its first-ever UX-driven initiative. As the sole UX researcher and designer on the project, I collaborated with cross-functional teams, including product, development, QA, marketing, and sales, to reimagine the platform from the ground up.

Note: This project is based on a real-world engagement under a non-disclosure agreement (NDA). All sensitive data and proprietary information have been omitted or anonymized in accordance with the NDA terms.

Goal

To redesign AuthDoc’s verification platform to improve task efficiency, user satisfaction, and accessibility for diverse user types, while ensuring full adoption by all legacy users and enabling a seamless, accurate, and fast verification process.

Outcome

92% adoption rate among legacy users

35% decrease in task completion time

Product Discovery

Stakeholder Interviews and Product Outcomes

Successful discovery starts with clear outcomes that guide the research and align it with both business goals and user needs. To set these outcomes, I interviewed 9 key stakeholders from various teams to get a complete picture of the organization’s objectives and challenges.

The main business priorities were customer retention and ensuring a smooth migration to the new version of the platform. During these conversations, we pinpointed the importance of keeping existing customers by addressing their frustrations and making the transition as smooth as possible.

Together, we mapped these business goals to product outcomes we could realistically achieve during the project. Some of them were:

Increased user retention

Improved user satisfaction and loyalty, leading to a higher percentage of users continuing to use the platform after the migration to the new version.

Higher task success rates

Enhanced ease of use, resulting in more users successfully completing key tasks like identity verification, database search, and troubleshooting.

Decreased error rates

Fewer user errors during critical actions like identity verification and report lookup, resulting from better UI design, clearer instructions, and improved error handling.

Reduced time on task

Streamlined workflows that allow users to complete common tasks more quickly.

Customer and User Segmentation

Given the B2B nature of the platform, the customers and end-users are distinct groups with different needs and objectives. Based on insights from the product team, we identified three main customer segments: Resellers, High Risk Buyers and High Performance Buyers.

Reseller

These customers offer the platform as part of a larger suite of services to their clients, making ease of integration and scalability key priorities.

Main jobs

Account and User management

Session management

Service user analysis

High Risk Buyer

Typically from industries like finance, law, and other high-risk sectors, these customers focus on minimizing risk without slowing down their operations.

Main jobs

Document scanning and verification

Accessing reports

Accessing Helpdesk

High Performance Buyer

These customers, which include governmental organizations and rental businesses, prioritize speed and efficiency.

Main jobs

Document scanning and verification

Accessing reports

Accessing Helpdesk

In addition to these customer segments, the legacy platform also supports three types of users:

Basic

Typically responsible for the actual document verification tasks, they engage with the platform regularly to complete their day-to-day workflows.

Extended Rights

These are managers or supervisors who oversee verification processes and ensure compliance. They have broader access to manage users and monitor activities.

Admins

Admins function as local support, managing the platform at an organizational level, resolving technical issues, and maintaining user accounts.

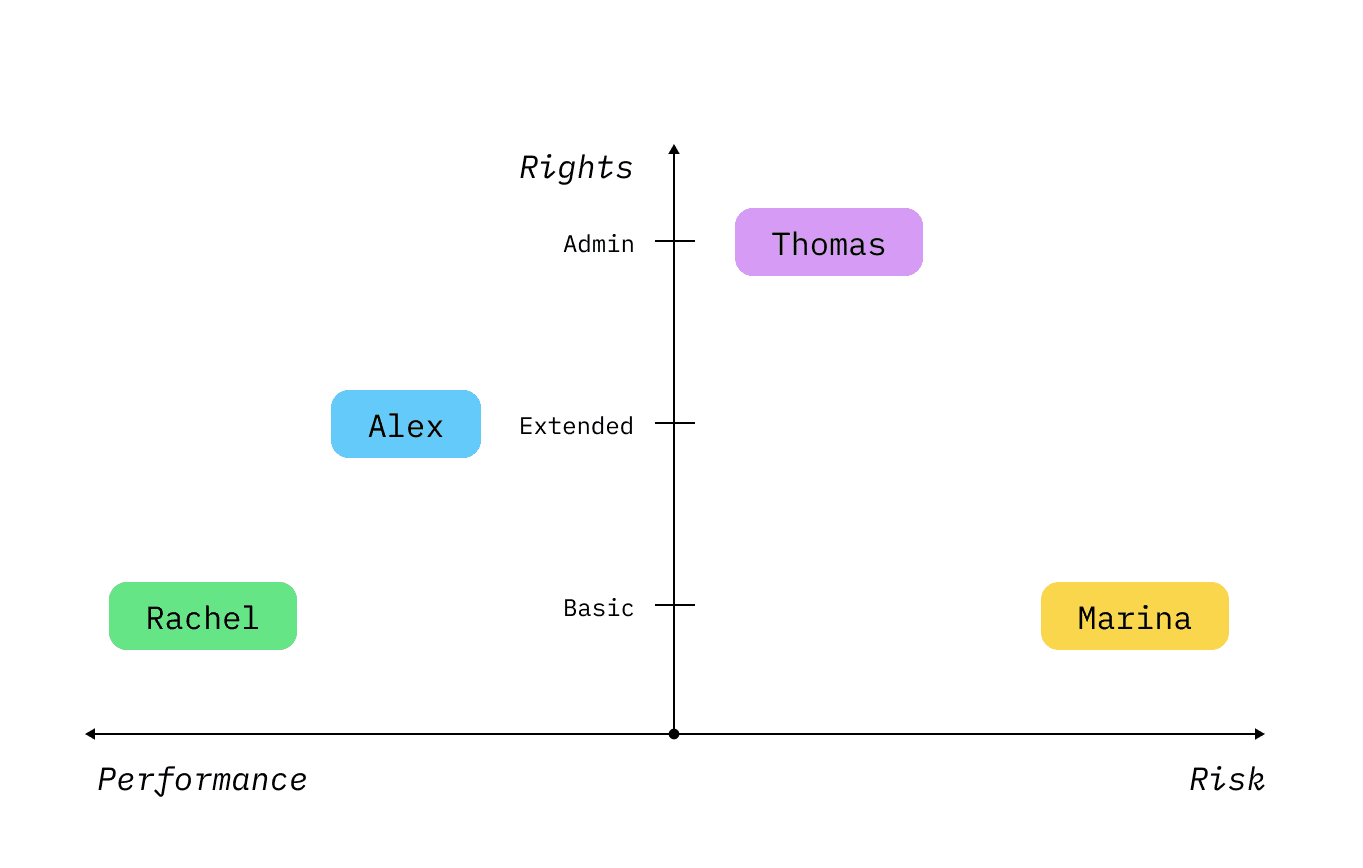

Based on the application analysis and stakeholder interviews, I created the following provisional personas, to be validated during further research: Alex (extended rights user + reseller), Marina (basic user + risk-averse buyer), Rachel (basic user + performance-oriented buyer), and Thomas (admin).

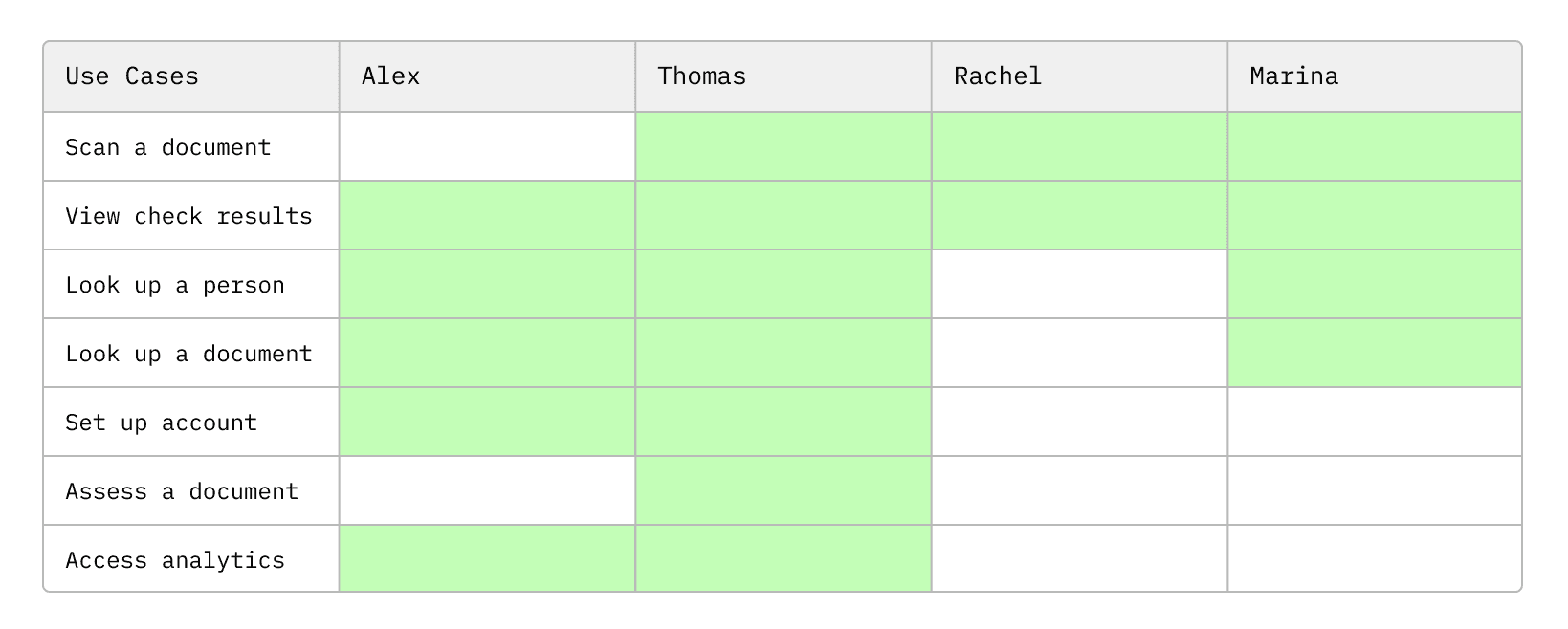

I mapped the provisional personas to various use cases across the platform to ensure a more targeted approach during user interviews. This mapping helped identify the specific tasks and workflows each persona encounters, enabling me to tailor interview questions to address the most relevant topics for each user group.

Research methodology

To conduct the foundational research, I used a mixed-method approach:

Heuristic Evaluation: I started by conducting a heuristic evaluation to quickly identify usability issues based on established design principles. This provided initial insights into the platform’s weaknesses.

JTBD User Interviews: Using the Jobs-to-Be-Done (JTBD) framework, I conducted qualitative user interviews to understand the underlying motivations behind user behaviors and the "jobs" they were hiring the platform to do. This helped uncover deeper user needs and pain points.

Moderated Usability Testing: Finally, I conducted moderated qualitative usability tests to assess task success rates, completion times, and user satisfaction. This established key benchmarks to guide the redesign and ensure improvements in efficiency, ease of use, and overall performance. While I initially intended to use tools like Hotjar for natural user observation, technical constraints led me to rely on live, moderated sessions. This approach still provided valuable real-time insights.

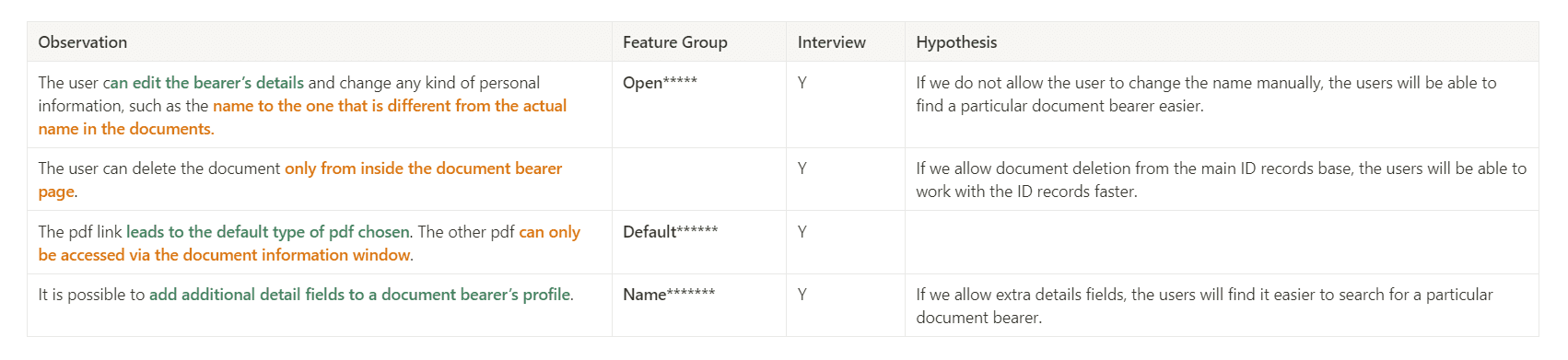

Excerpt from Heuristic Analysis Document

The findings from the heuristic evaluation played a crucial role in shaping the questions for user interviews. These insights highlighted key usability challenges and informed the areas that required deeper exploration with users.

JTBD Framework

I selected the Jobs-to-Be-Done (JTBD) framework for the research for several key reasons:

After collaborating with the product team to identify the main use cases for the AuthDoc platform, we recognized that these could be easily reframed as “jobs” that users are trying to accomplish.

The JTBD framework provided a goal-driven perspective, enabling me to focus on the users’ objectives. Notably, I found that multiple user groups often performed the same job, regardless of their role or segment.

By adopting this approach, the team and I gained clarity on the areas where the platform delivered value and where it fell short. This allowed us to sharpen our focus on what truly mattered to users, ensuring we prioritized the features and improvements that would have the greatest impact.

Continuous Discovery

As part of the initiative to introduce continuous discovery within the company, I incorporated a mechanism to build a research participant database. Everyone who received a screener questionnaire during this phase had the option to voluntarily provide their contact information for future research opportunities. Participants could also specify the types of research activities they were willing to engage in, such as in-depth interviews, usability testing, or surveys. This approach streamlined future recruitment for our ongoing research efforts.

Participant Recruitment and Screening

I initiated two parallel efforts for participant recruitment:

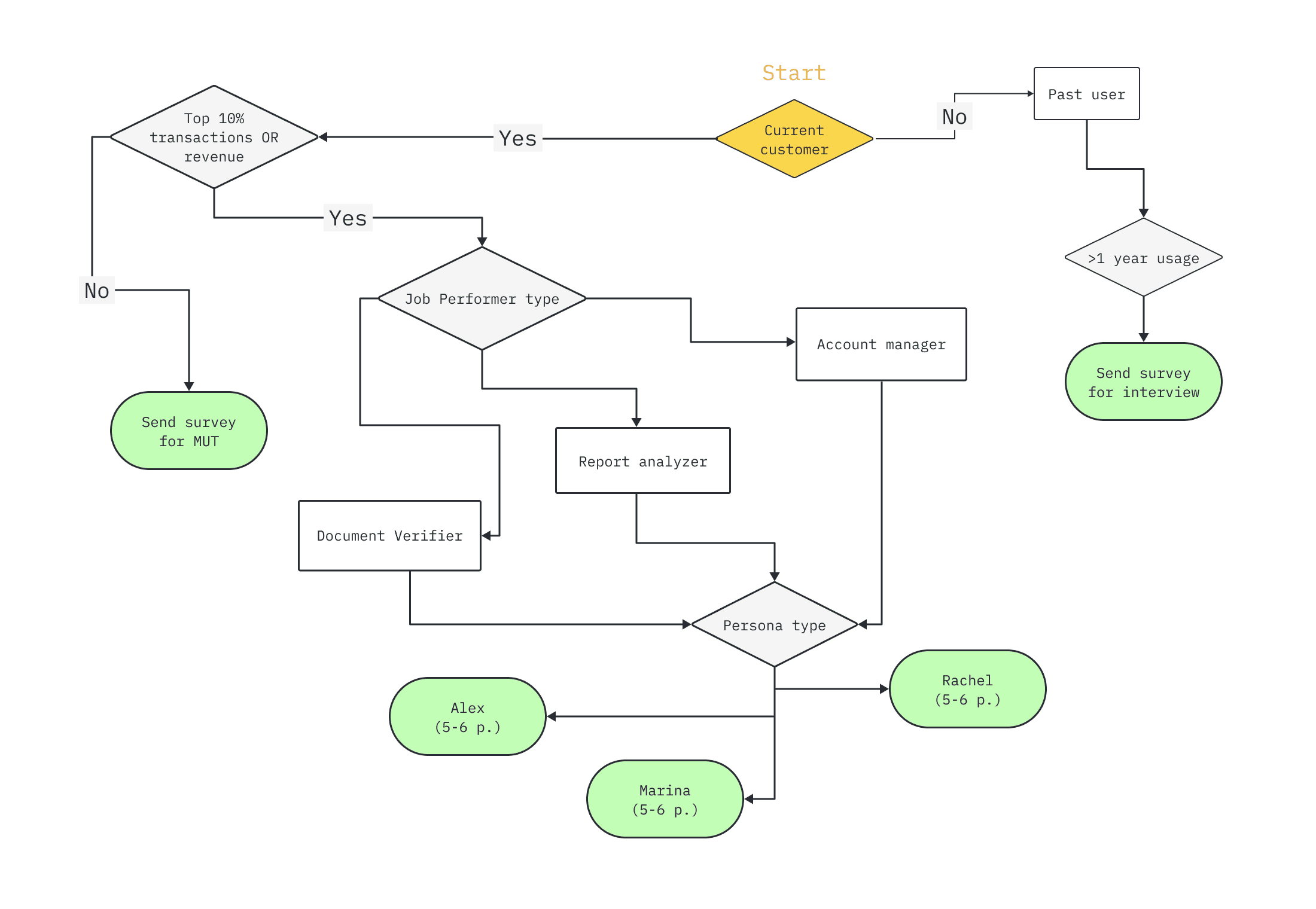

JTBD In-Depth Interviews: Recruitment was conducted through direct outreach by the Sales team. Using their personal connections with a select group of power users, the Sales team helped identify companies with frequent platform engagement. I developed a screening algorithm to select appropriate participants (see Figure below), and a screener questionnaire was sent out. This approach yielded a high response rate of 82%. Additionally, participants had the option to sign up for moderated usability testing sessions. In total, I conducted 18 interviews of 80 minutes each (both in-person and remote) and applied questioning techniques such as TEDW (Tell, Explain, Describe, Walk me through).

Moderated Usability Testing: Recruitment for these sessions was carried out via marketing channels. In collaboration with the Marketing team, we sent a screener questionnaire to a broader audience, targeting companies subscribed to the organization’s newsletter. This approach resulted in a response rate of approximately 25%. In total, I conducted 24 moderated usability studies of 45 minutes each (both in-person and remote).

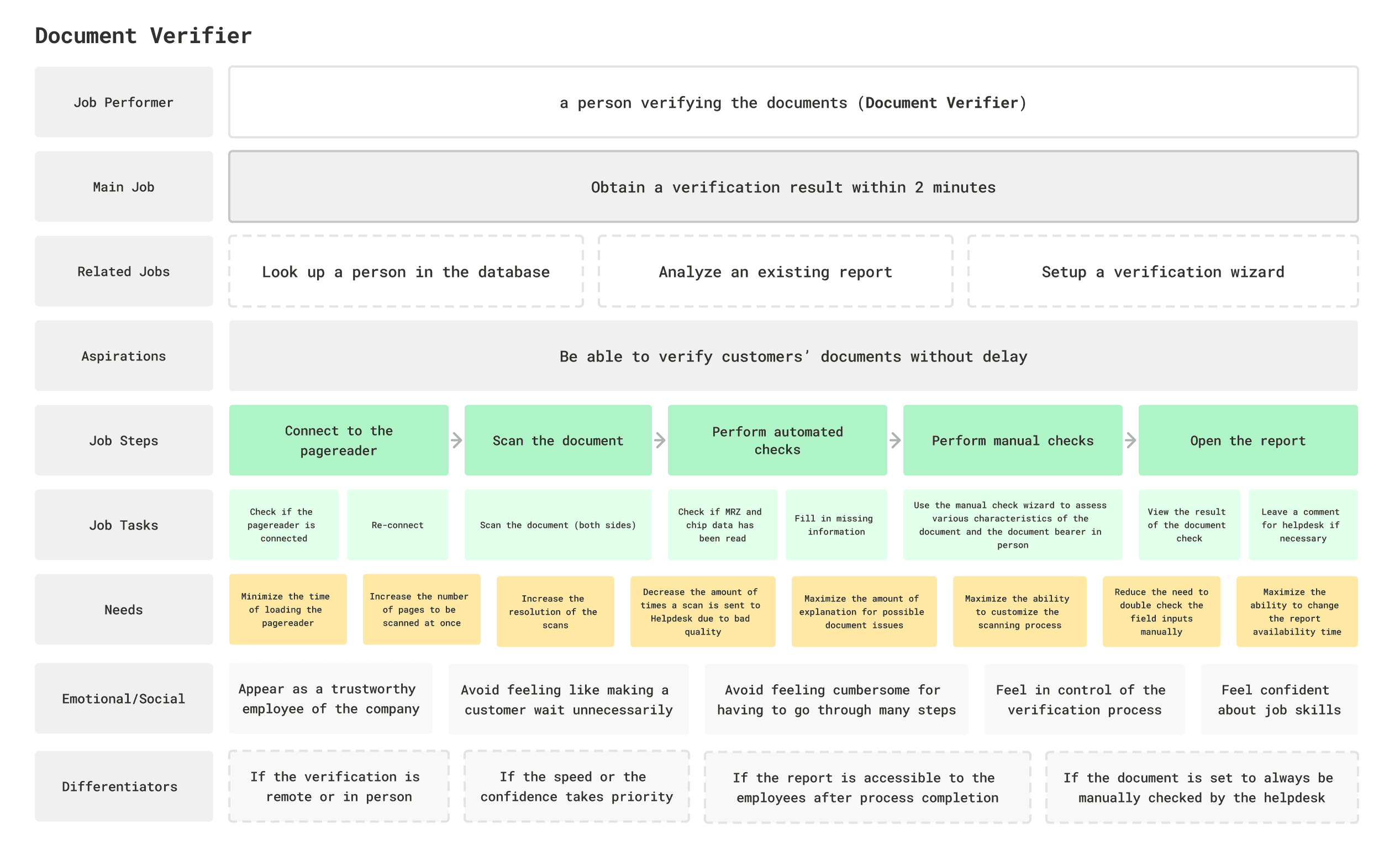

JTBD Canvas and Insights

After completing the interviews, I synthesized the findings into six Jobs-to-Be-Done (JTBD) canvases. For this case study, I will focus on one key Job: “Obtain a verification result within 2 minutes”. Below is a sample of the filled-in canvas (note: the data provided here is illustrative and not the actual information used).

In total, 38 job needs were identified for this specific job. The needs statements were formulated during a workshop with both the product and development teams using the framework developed by Lance Bettencourt and Anthony Ulwick for desired outcome statements, which include: direction of change + unit of measure + object + clarifier. Similar needs were combined to avoid repetition.

After identifying the needs statements, I contacted the interviewees to conduct a survey to prioritize the most critical ones. Each statement was rated by the participants on two scales:

Importance – How essential the need is to the user.

Satisfaction – How well the current solution addresses that need.

For example:

Reduce the need to double check the inputs manually.

How important is this to you? (1 = very unimportant, 7 = very important)

How satisfied are you with the current solution? (1 = very unsatisfied, 7 = very satisfied)

These ratings enabled me to calculate an opportunity score by subtracting the satisfaction score from the importance score. The product team and I decided to focus on the 11 needs with a score above 10.
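A minimal sketch of how such a score can be computed from the two ratings. The exact scaling used in the project is not stated, so this shows two variants: the plain gap described above, and the opportunity algorithm commonly attributed to Anthony Ulwick (importance plus the unmet gap, floored at zero). On 1 to 7 scales the plain gap cannot exceed 6, so a cutoff of 10 would only be reachable with a combined or rescaled score. The function names and the ratings are hypothetical.

```python
from statistics import mean


def gap_score(importance, satisfaction):
    """Plain gap: importance minus satisfaction."""
    return importance - satisfaction


def opportunity_score(importance, satisfaction):
    """Ulwick-style opportunity: importance + max(importance - satisfaction, 0)."""
    return importance + max(importance - satisfaction, 0)


# Hypothetical 1-7 ratings from four respondents for one need statement.
ratings = {
    "Reduce the need to double check the inputs manually": {
        "importance": [7, 6, 7, 6],
        "satisfaction": [2, 3, 2, 3],
    },
}

for need, r in ratings.items():
    imp = mean(r["importance"])      # average importance across respondents
    sat = mean(r["satisfaction"])    # average satisfaction across respondents
    print(need, gap_score(imp, sat), opportunity_score(imp, sat))
```

Flooring the gap at zero means an over-served need (satisfaction higher than importance) is never ranked below its own importance, which keeps the ordering easy to read in a prioritization workshop.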

Job Stories + Pain Points

As a final step in working with the JTBD canvas, I decided to combine Job Stories with Pain Points. For the 11 most important unmet needs, I created Job Stories and revisited the interview insights to find exact pain points that matched each story. I tagged each pain point with the corresponding Persona, creating a layered system that connects Job Performers to Personas.

This makes it possible to tailor solutions to the specific challenges of different Personas.

JOB STORY

When I am scanning a document in-person and speed is the priority, I want the software to highlight potentially misread elements, so I can reduce the need to double-check input fields manually.

Interview Insights Related to Pain Points

“AuthDoc allows to manually edit the information but doesn’t check if it was edited correctly” (Marina)

“does not show which parts got read wrong, you have to find it manually” (Marina)

“sends every document with manual edits to Helpdesk, slows things down” (Rachel)

By combining these persona-specific pain points with the Job Story, the solution tackles the main task while also adapting to the unique needs of each Persona.

Benchmarking

TASK FORMAT

USER GOAL

Obtain a verification result

CONTEXT

In-person verification

RELEVANT DETAILS

Aiming to obtain a verification report within 2 minutes

STARTING POINT

Main page

END POINT

PDF report

CUT-OFF TIME

5 minutes

TASK SCENARIO

You are looking to obtain a verification report for a customer’s international document to verify their right to work.

The second part of my research focused on establishing concrete benchmarks for the current platform's performance, so that we could track whether the UX changes in the new version genuinely enhance the user experience. The objective was to assess the effectiveness, efficiency, and satisfaction of the most critical tasks within AuthDoc. Based on stakeholder interviews, I selected the following metrics: Completion Time, Task Success, Task Severity, Single Ease Question (SEQ), and UMUX-Lite.
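For reference, the two questionnaire metrics can be scored as in the sketch below. It assumes the standard 7-point versions of both instruments: SEQ is a single rating from 1 (very difficult) to 7 (very easy), and the raw UMUX-Lite score rescales the sum of its two items to a 0 to 100 range. The function names are hypothetical, and this is not necessarily the exact scoring sheet used in the project.

```python
def umux_lite(capabilities, ease_of_use):
    """Raw UMUX-Lite score (0-100) from two 7-point agreement items."""
    for item in (capabilities, ease_of_use):
        if not 1 <= item <= 7:
            raise ValueError("UMUX-Lite items are rated from 1 to 7")
    # Shift each item to 0-6, sum (0-12), then rescale to 0-100.
    return (capabilities + ease_of_use - 2) / 12 * 100


def mean_seq(scores):
    """Average Single Ease Question rating across participants."""
    return sum(scores) / len(scores)


# Hypothetical responses for one task.
print(umux_lite(5, 6))
print(mean_seq([5, 6, 4, 7]))
```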

I conducted 20% of the tests remotely and 80% in person, as a portion of the testing required interaction with a physical object (a page reader/scanner). However, a limitation was that all in-person participants were based in a single country, while the company serves an international market.

The task above is one example; similar scenarios were developed for the other user goals aligned with the previously identified jobs.

To analyze the results, I created a stoplight chart.

Note: this is a sample and not the exact information used.
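The color coding behind a stoplight chart can be sketched as follows. The thresholds reuse the 2-minute target and 5-minute cut-off from the task format; mapping them to green, yellow, and red is an assumption made for illustration, and the session data is hypothetical.

```python
TARGET_SECONDS = 120   # 2-minute goal from the task format
CUTOFF_SECONDS = 300   # 5-minute cut-off from the task format


def stoplight(completed, seconds):
    """Classify one participant's attempt at a task (assumed color rules)."""
    if not completed or seconds > CUTOFF_SECONDS:
        return "red"       # failed or ran past the cut-off
    if seconds <= TARGET_SECONDS:
        return "green"     # completed within the target time
    return "yellow"        # completed, but slower than the target


# Hypothetical sessions: (participant, completed, seconds)
sessions = [("P1", True, 95), ("P2", True, 210), ("P3", False, 300)]
chart = {participant: stoplight(done, secs) for participant, done, secs in sessions}
print(chart)
```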

I gathered the metrics for each of the six User Goals aligned with the six JTBD Canvases. These benchmarks will support ongoing evaluations to track enhancements in user experience over time.

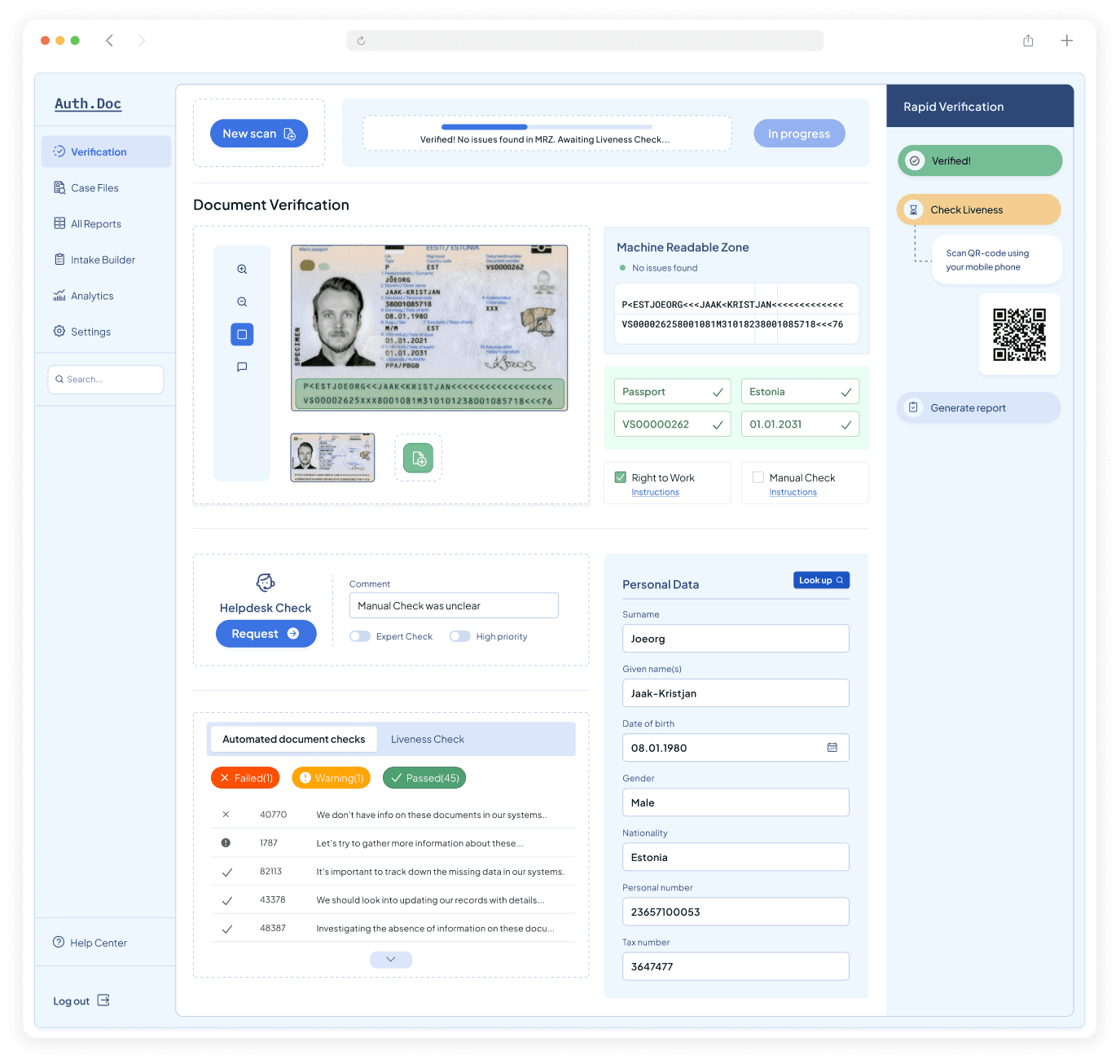

Solution Design

I used the Job Stories to inform and guide the solution design, including the creation of How Might We statements. For the purpose of this case study, I will focus on the “Obtain a verification result within 2 minutes” job and illustrate the design decisions that stemmed from it and its subsequent Job Stories. At this stage of the project, I used low-fidelity wireframes to prototype and test the solutions.

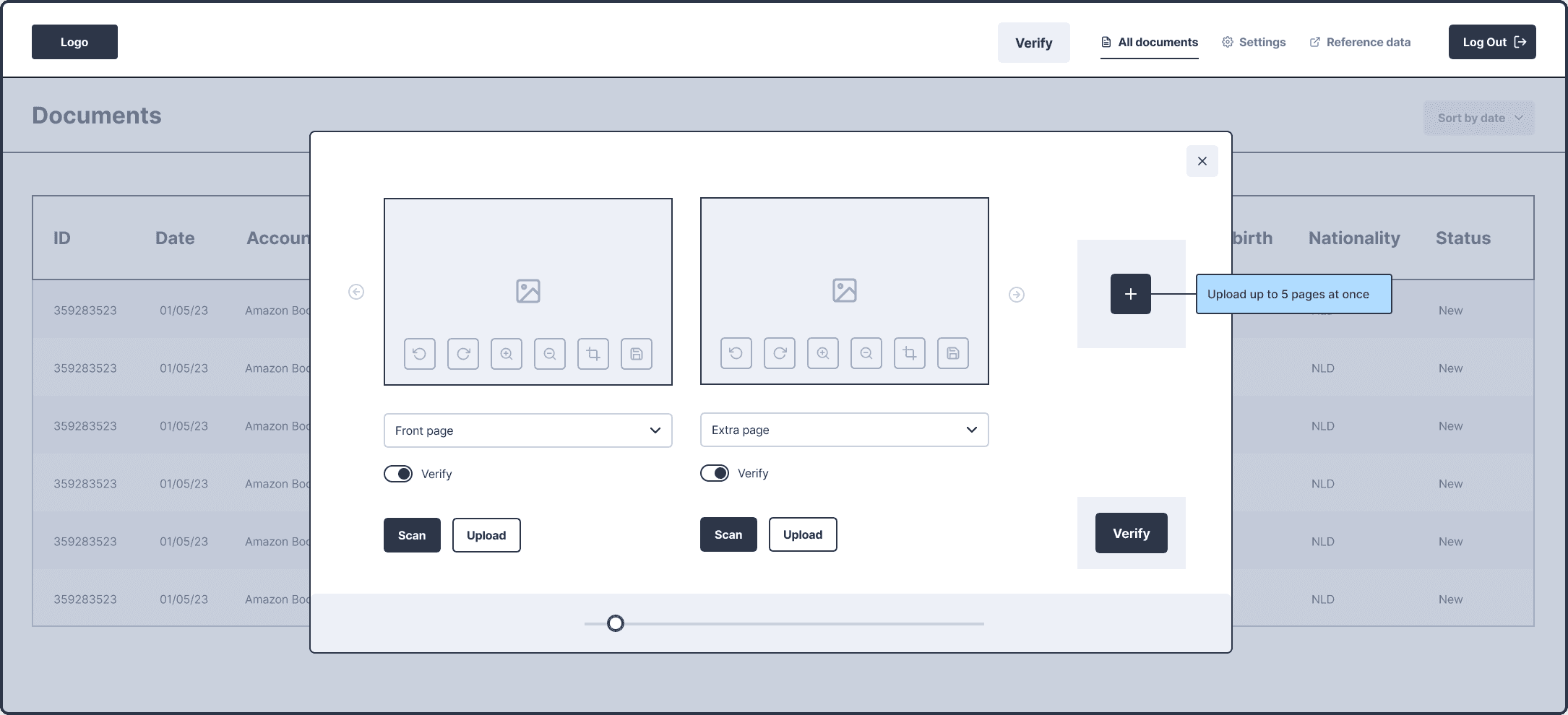

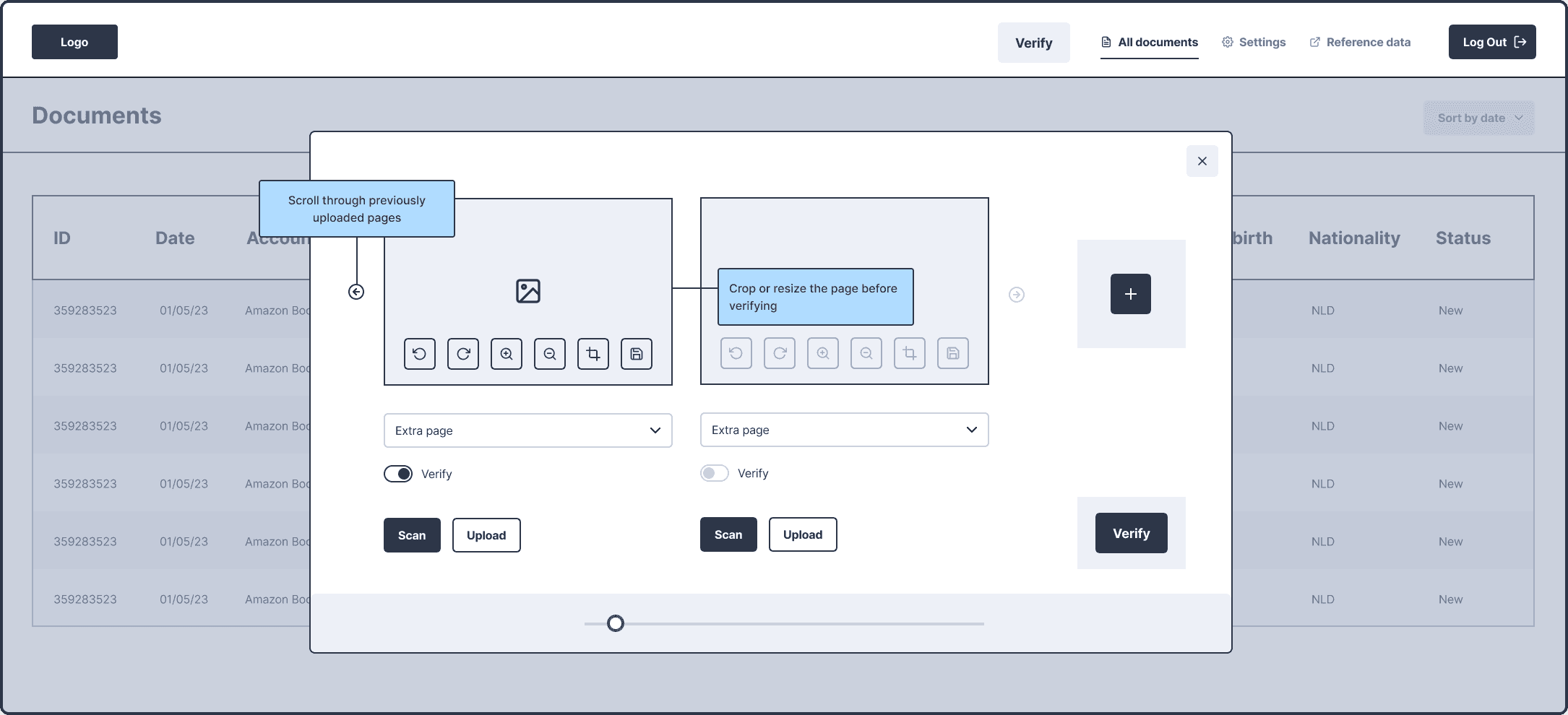

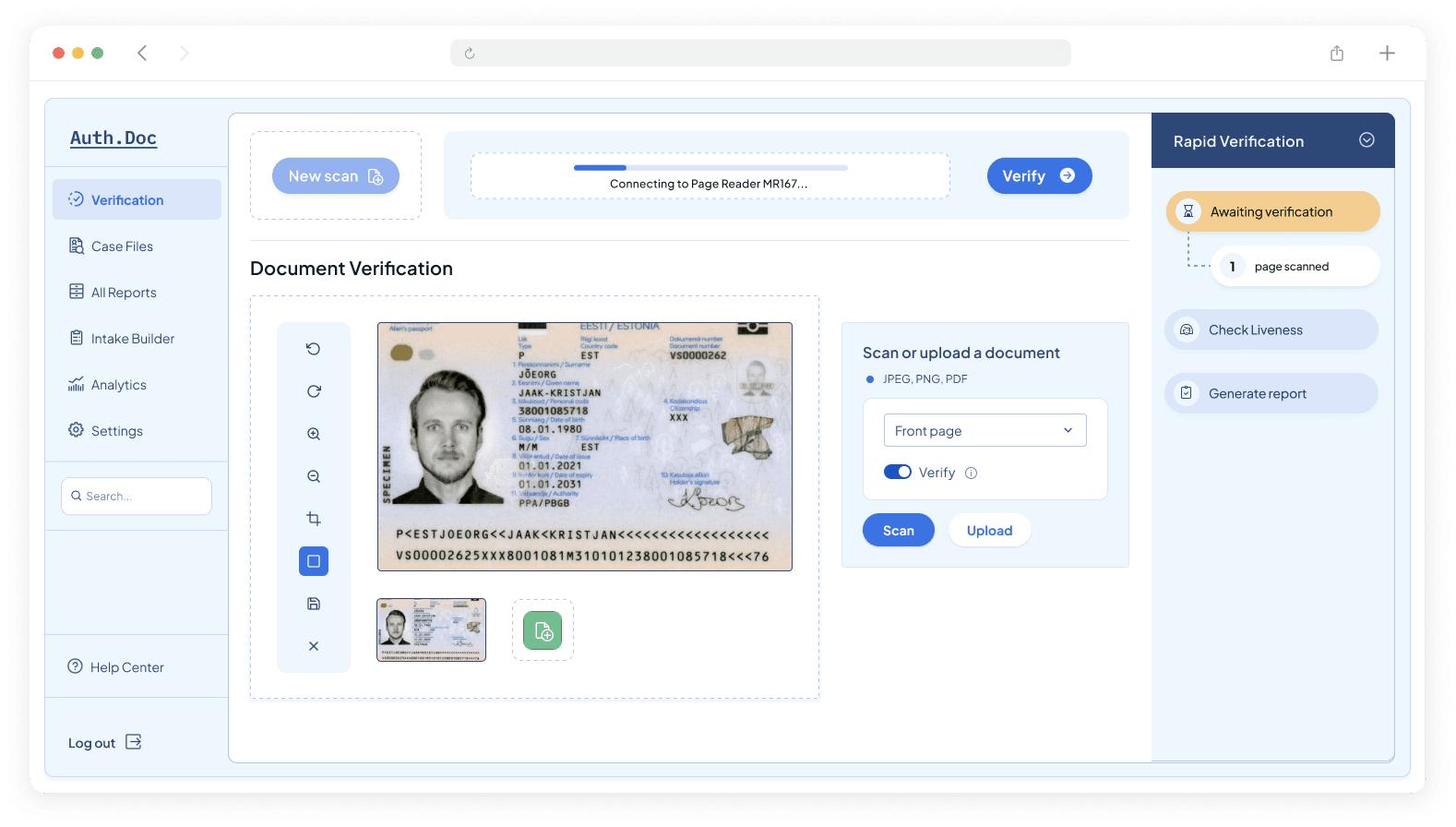

How might we enable users to scan multiple types of documents seamlessly?

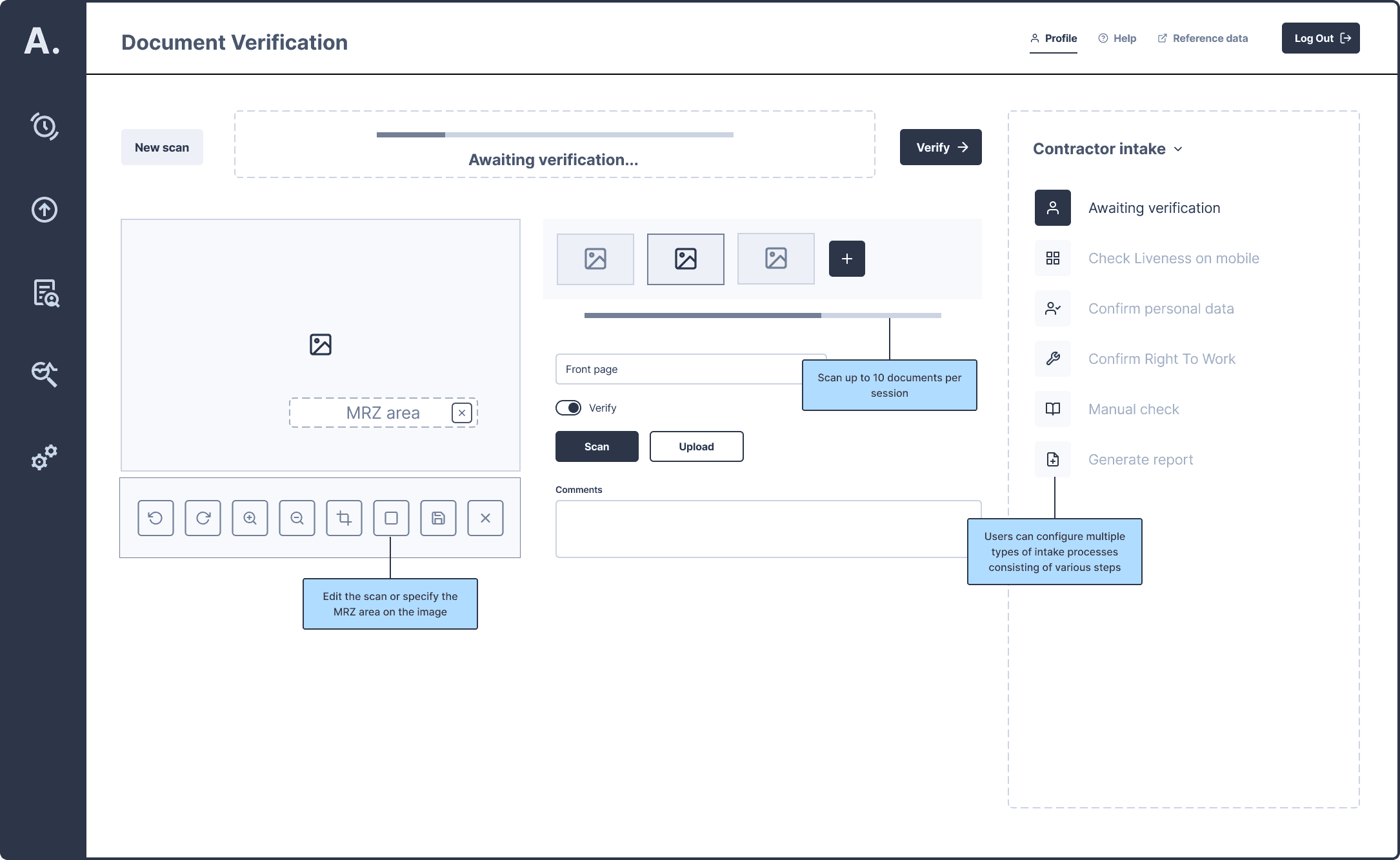

The first iteration of the document scanning page used a step-by-step wizard design that was already familiar to users. I added an option to upload up to ten pages, a selector for the type of each page, and a checkbox to mark whether a page should be verified or simply uploaded as part of the document bundle.

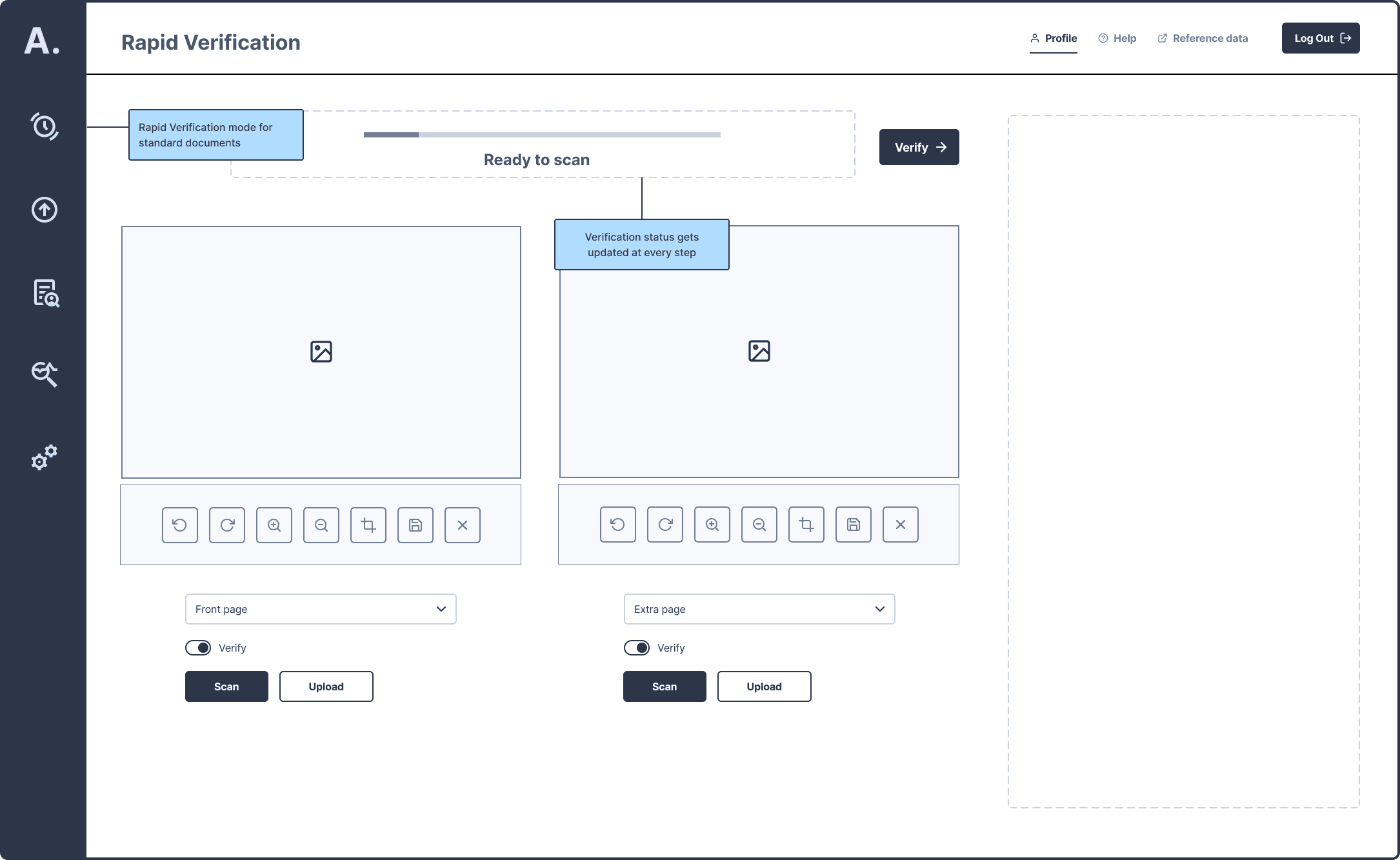

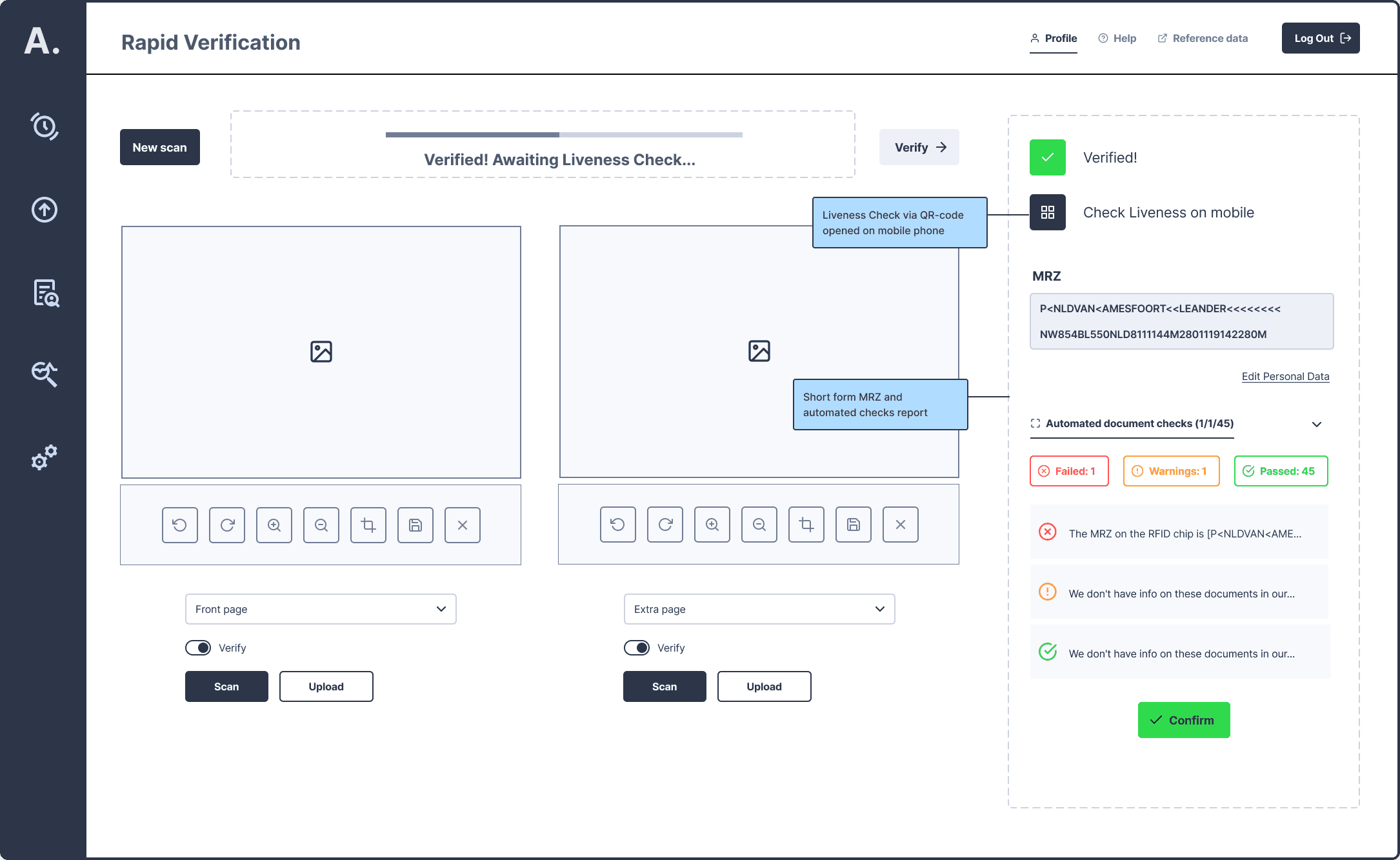

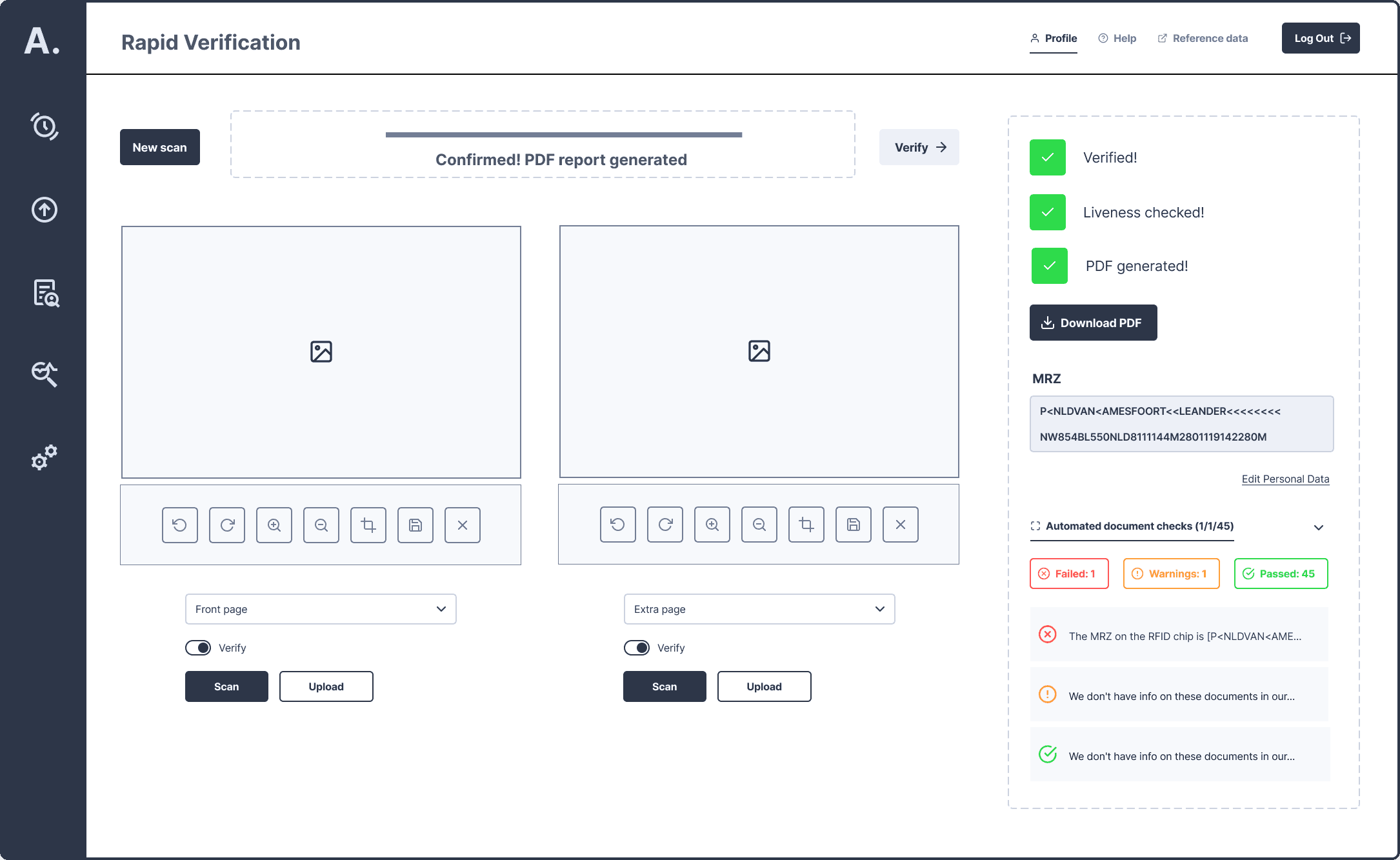

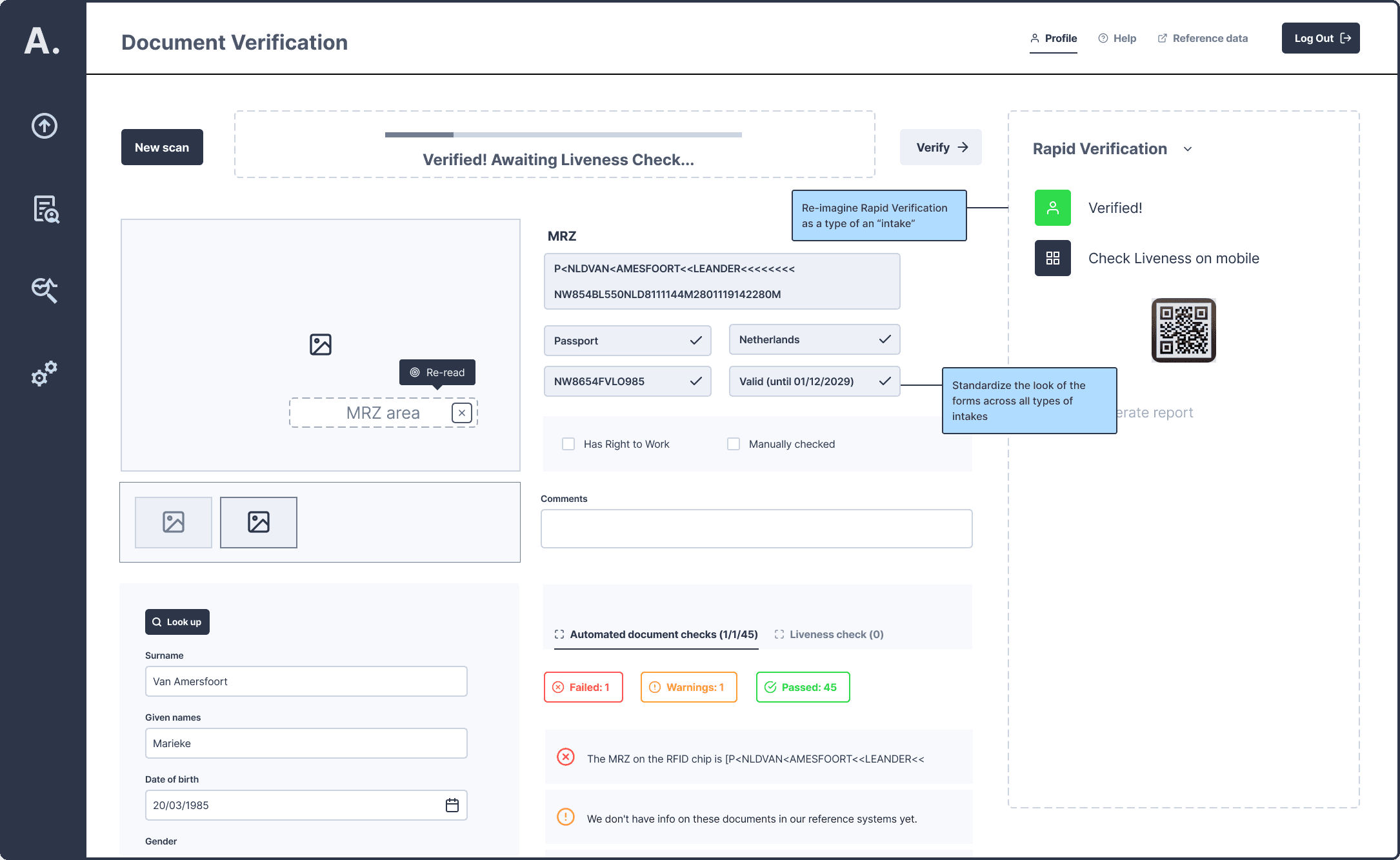

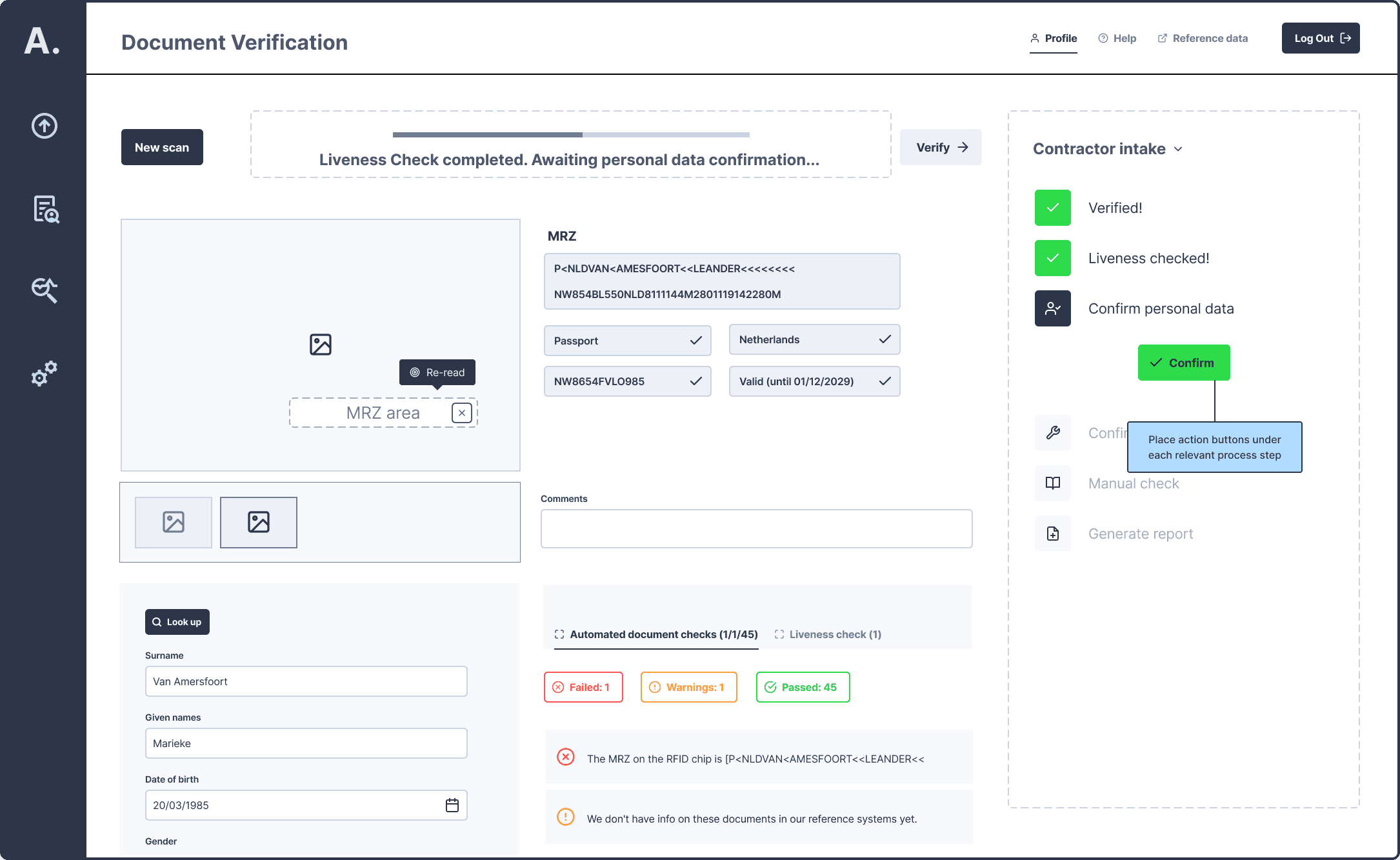

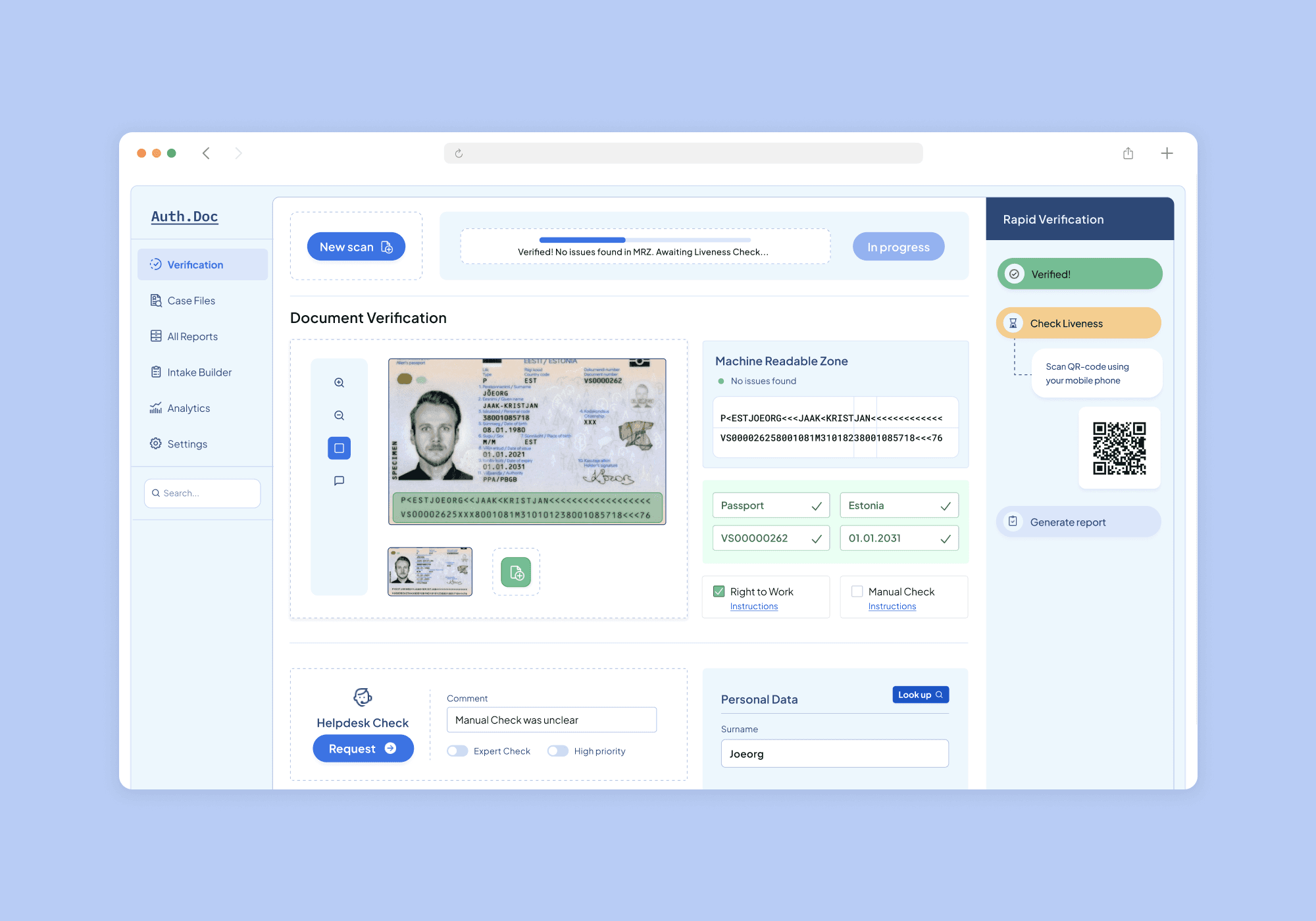

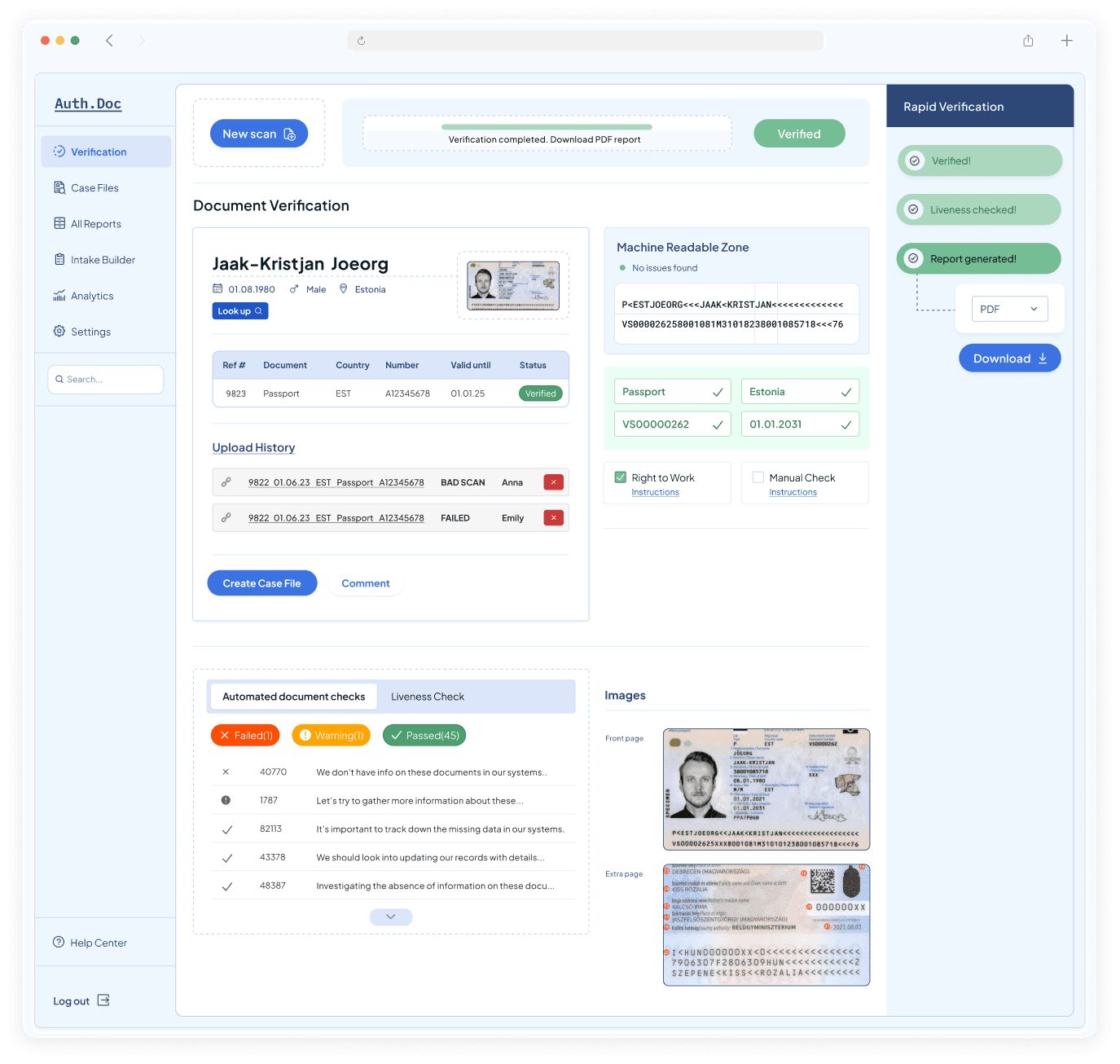

The concept for the second design iteration was driven by two user needs that emerged as top priorities in our survey. Users required both a quick, repetitive verification process (suited to performance-oriented users) and a more thorough, customizable process (preferred by risk-averse users). One solution we explored was to address these needs by creating separate modules for each type of verification.

The complete verification process allowed users to create customized "intake procedures" by selecting and combining different authentication modules enabled for their account.

The Rapid Verification solution initially raised some concerns among stakeholders. To address these, I conducted 10 qualitative concept tests using low-fidelity Figma prototypes. During testing, about 70% of participants struggled to distinguish when to use full verification versus rapid verification. In response to the feedback, I created a merged version of the design that combined both verification modules into a single layout and tested it using a 5-second test. The primary elements participants recalled were the “Intake” section and the multi-page scanning area, meeting our expectations for clarity and focus.

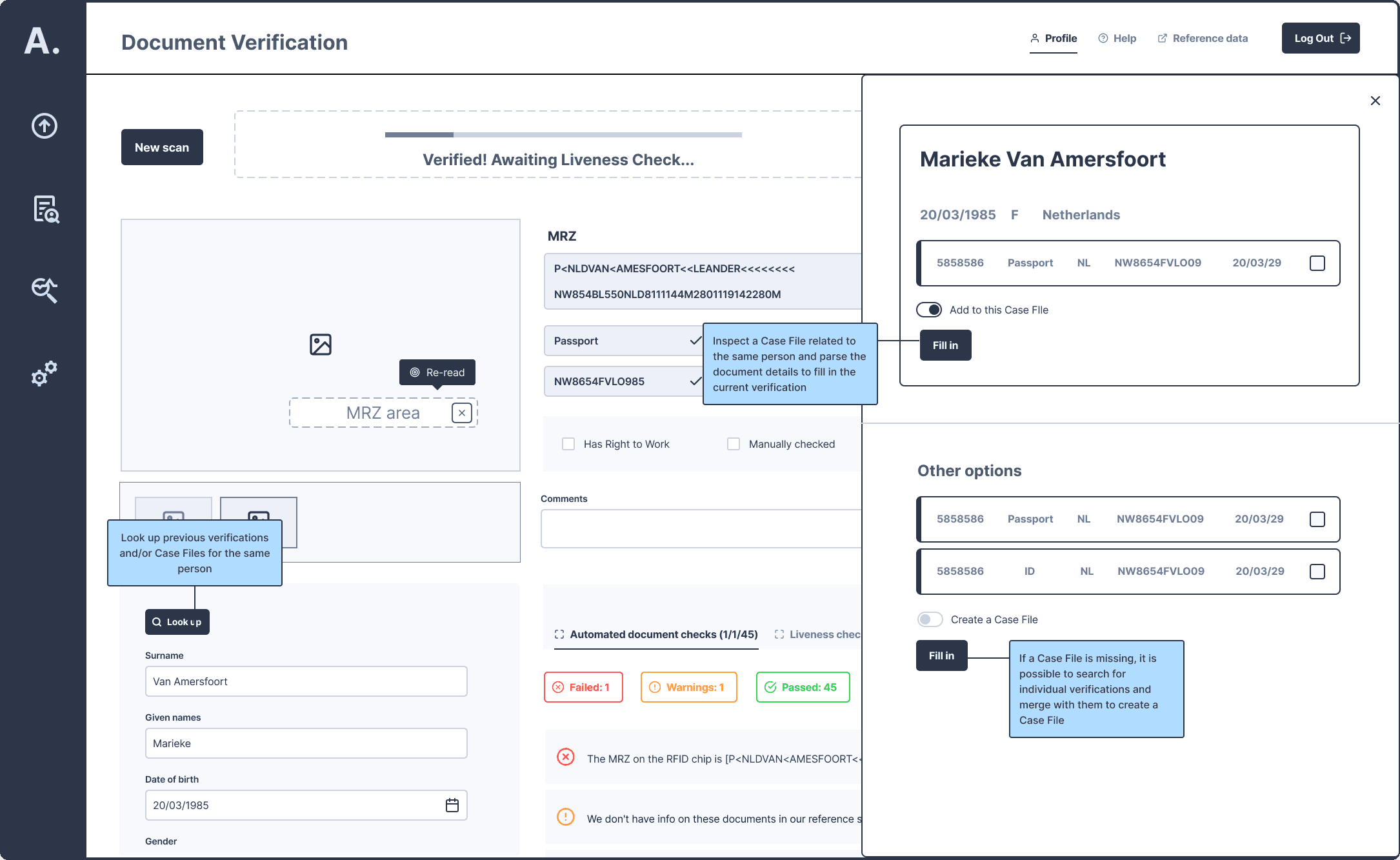

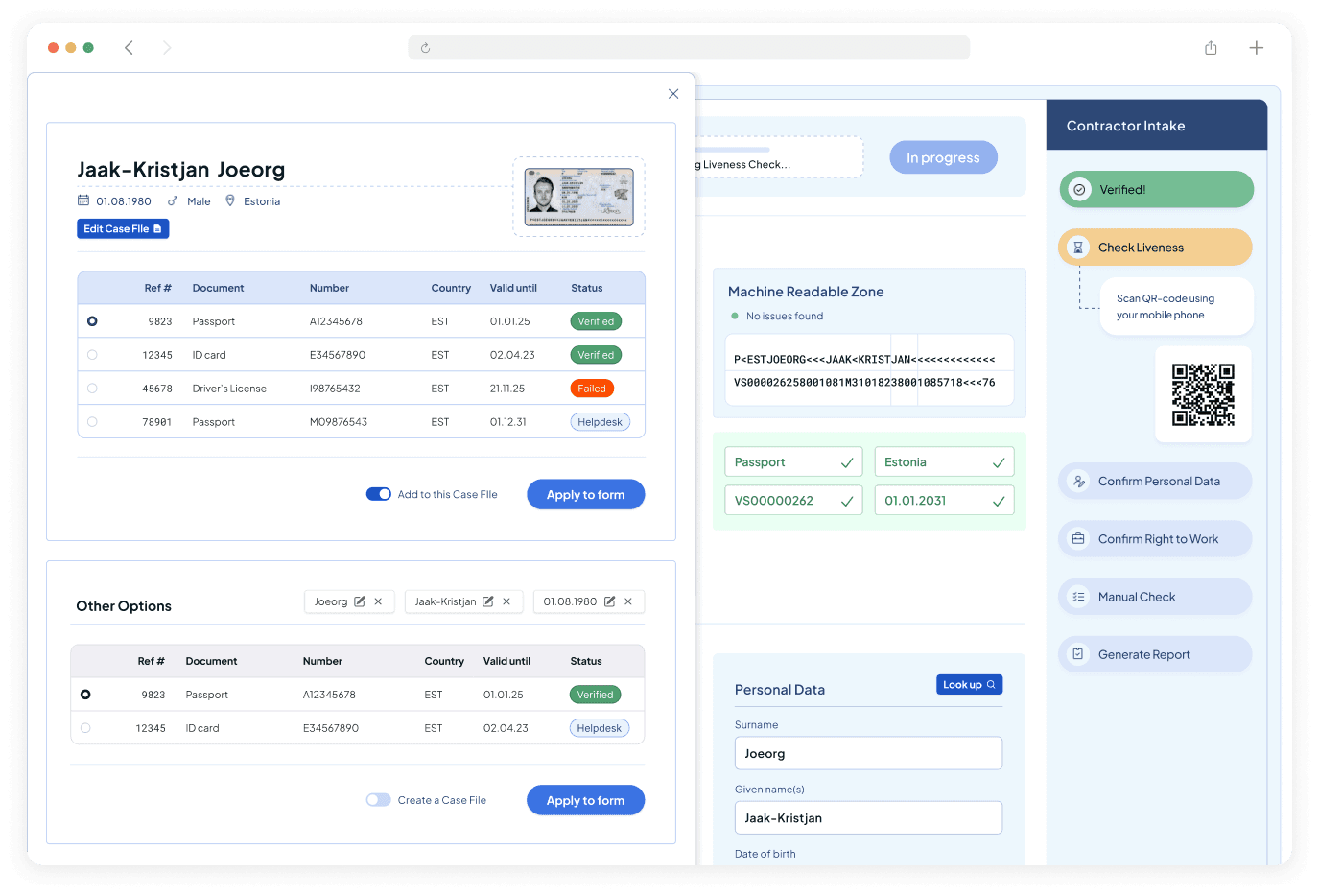

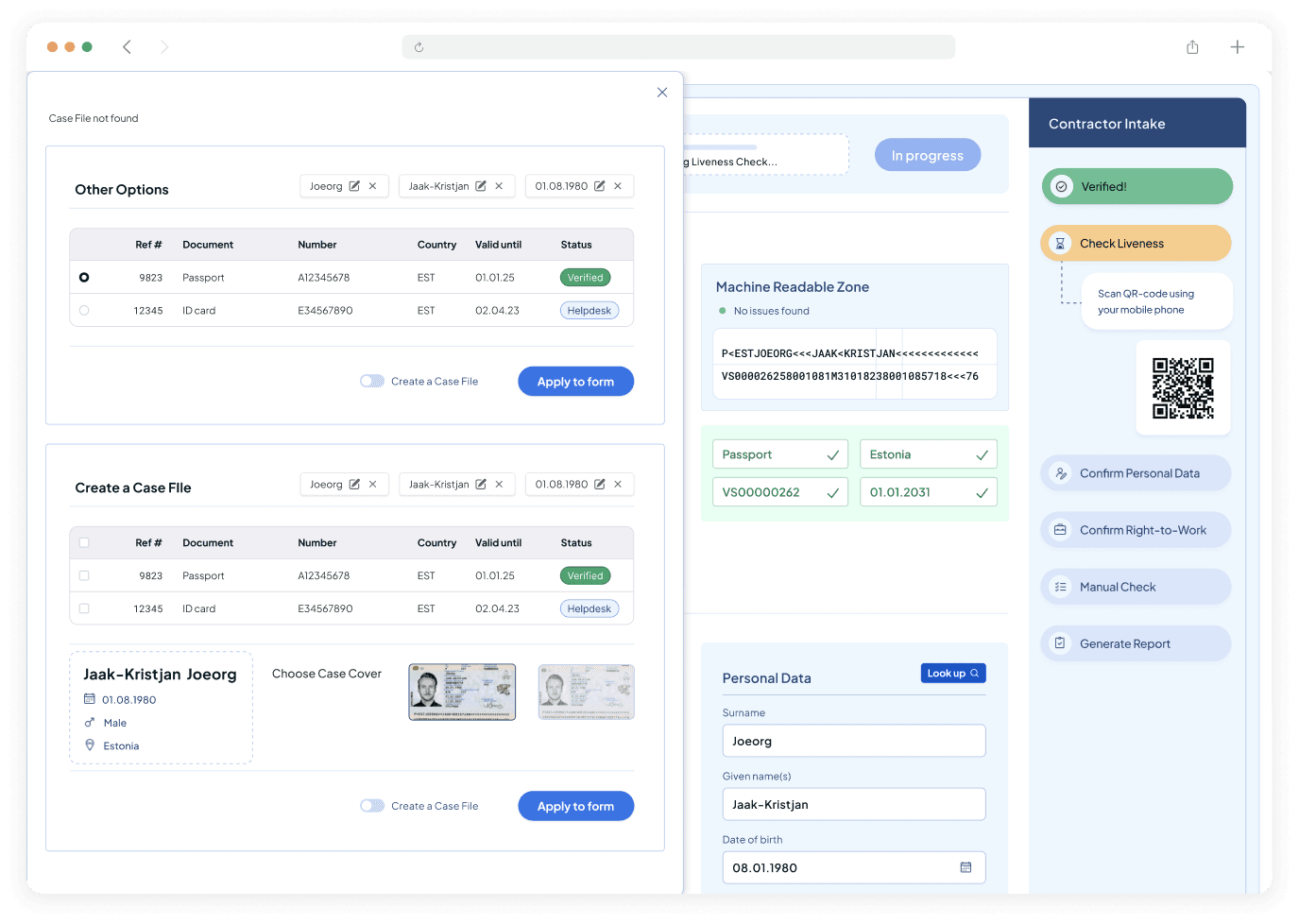

How might we allow users to easily access the scan history for the same individual?

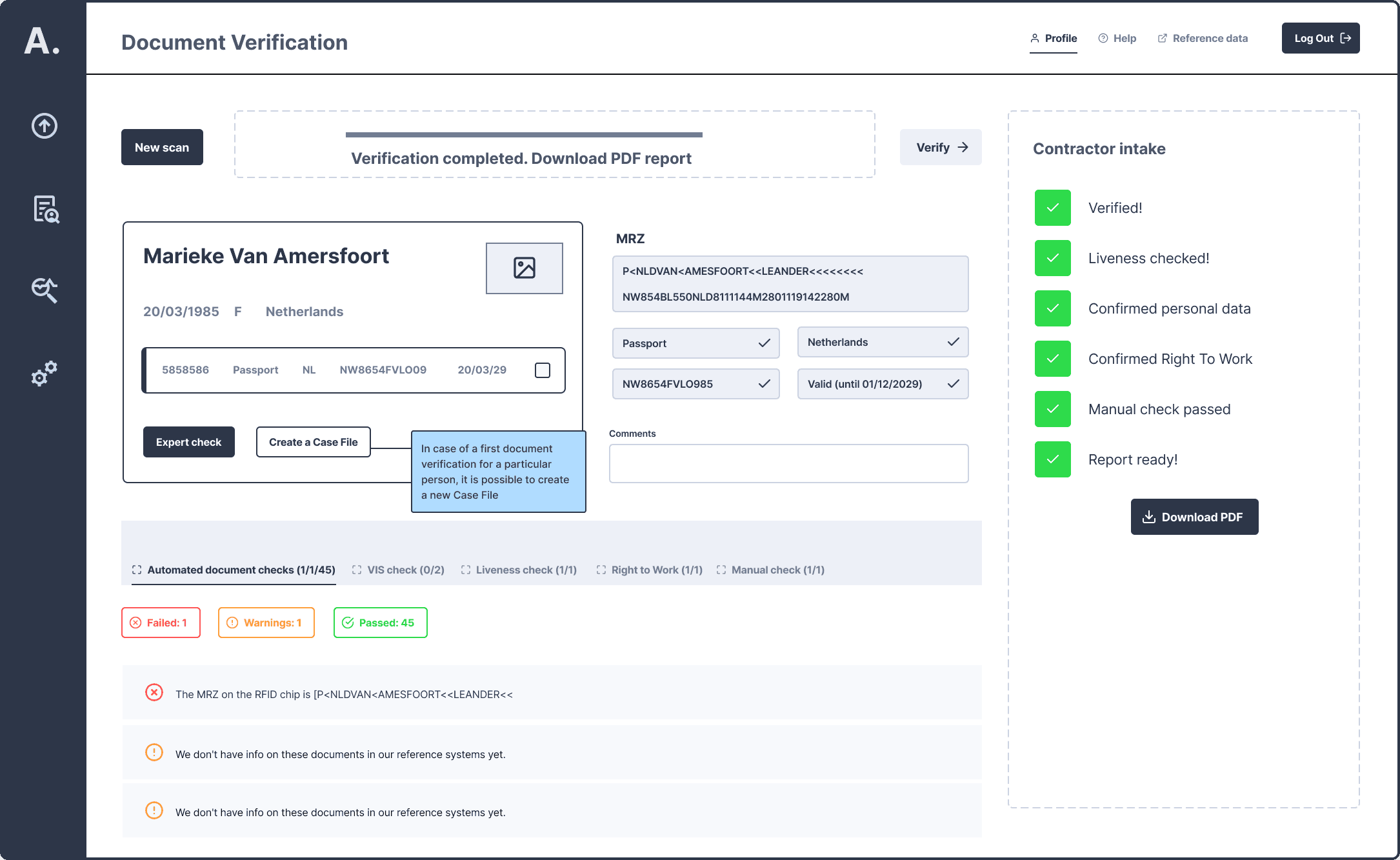

During the research phase, I discovered that most users desired some level of access to previously scanned documents. In response, I proposed a “Case Files” solution — a categorized collection of documents linked to the same individual and organized by document type. Users could choose to either group all relevant documents into Case Files or select specific ones for storage, with the remaining files accessible in a shared database. Additionally, users could pull information from Case Files during batch verification to minimize potential OCR errors.

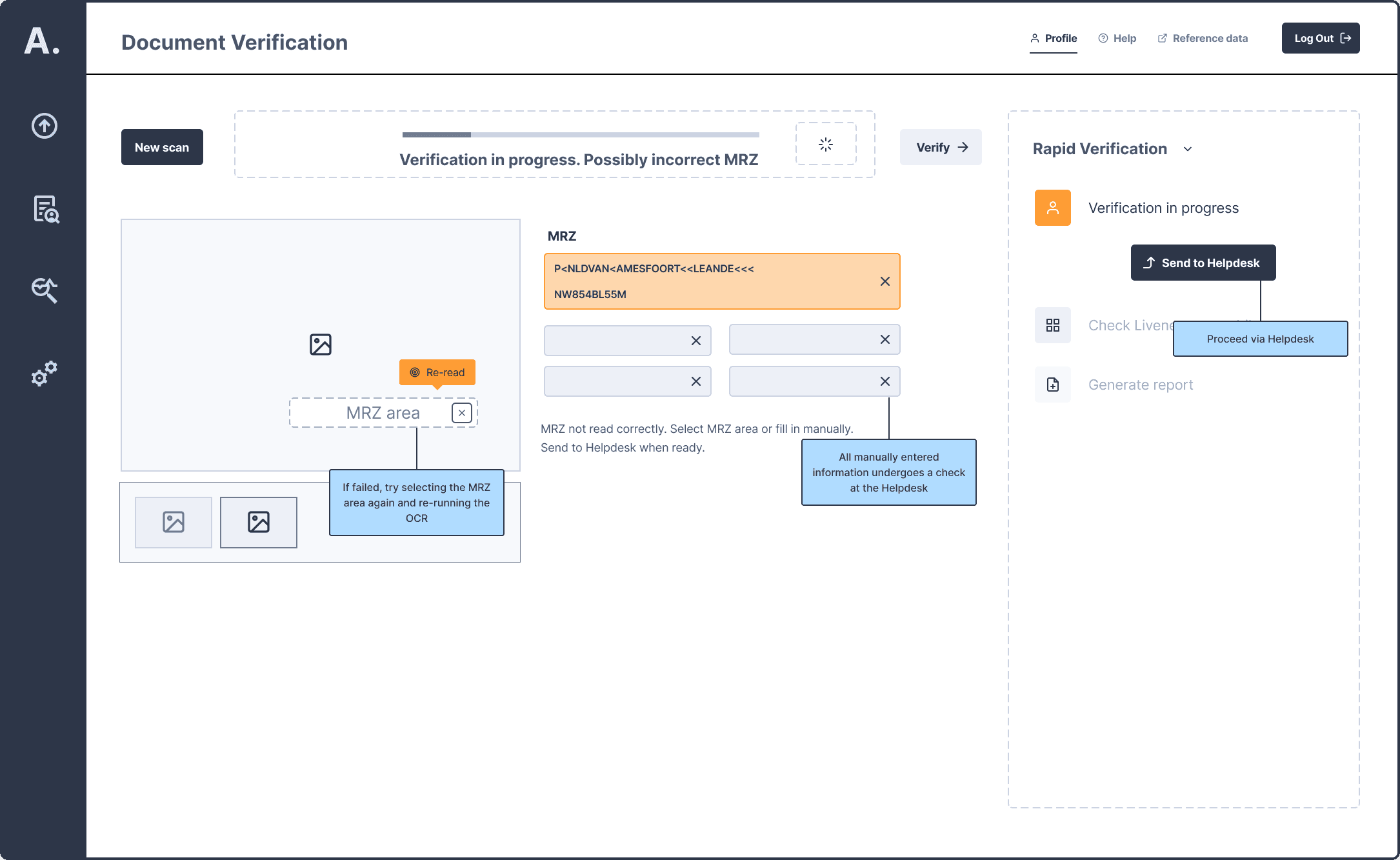

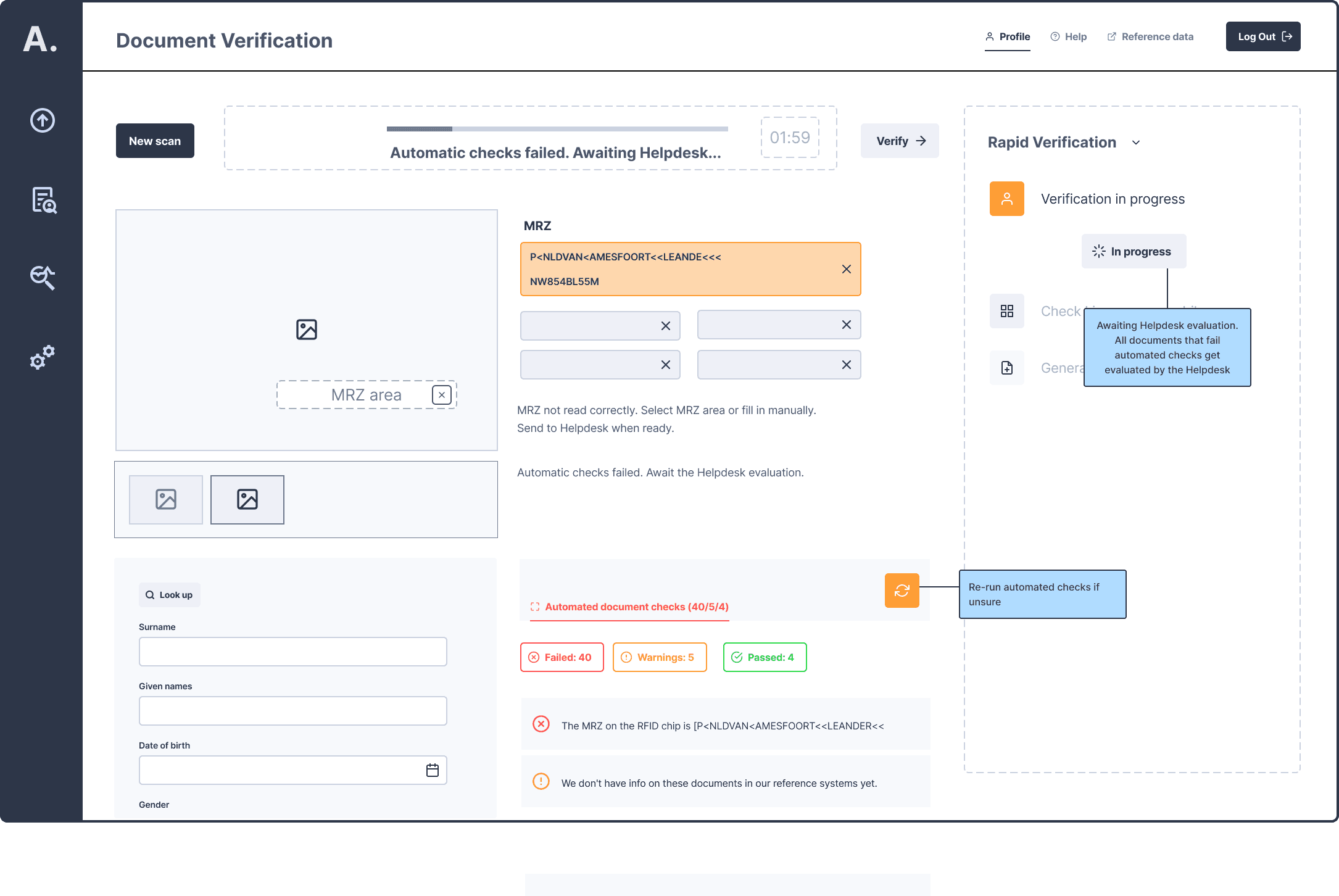

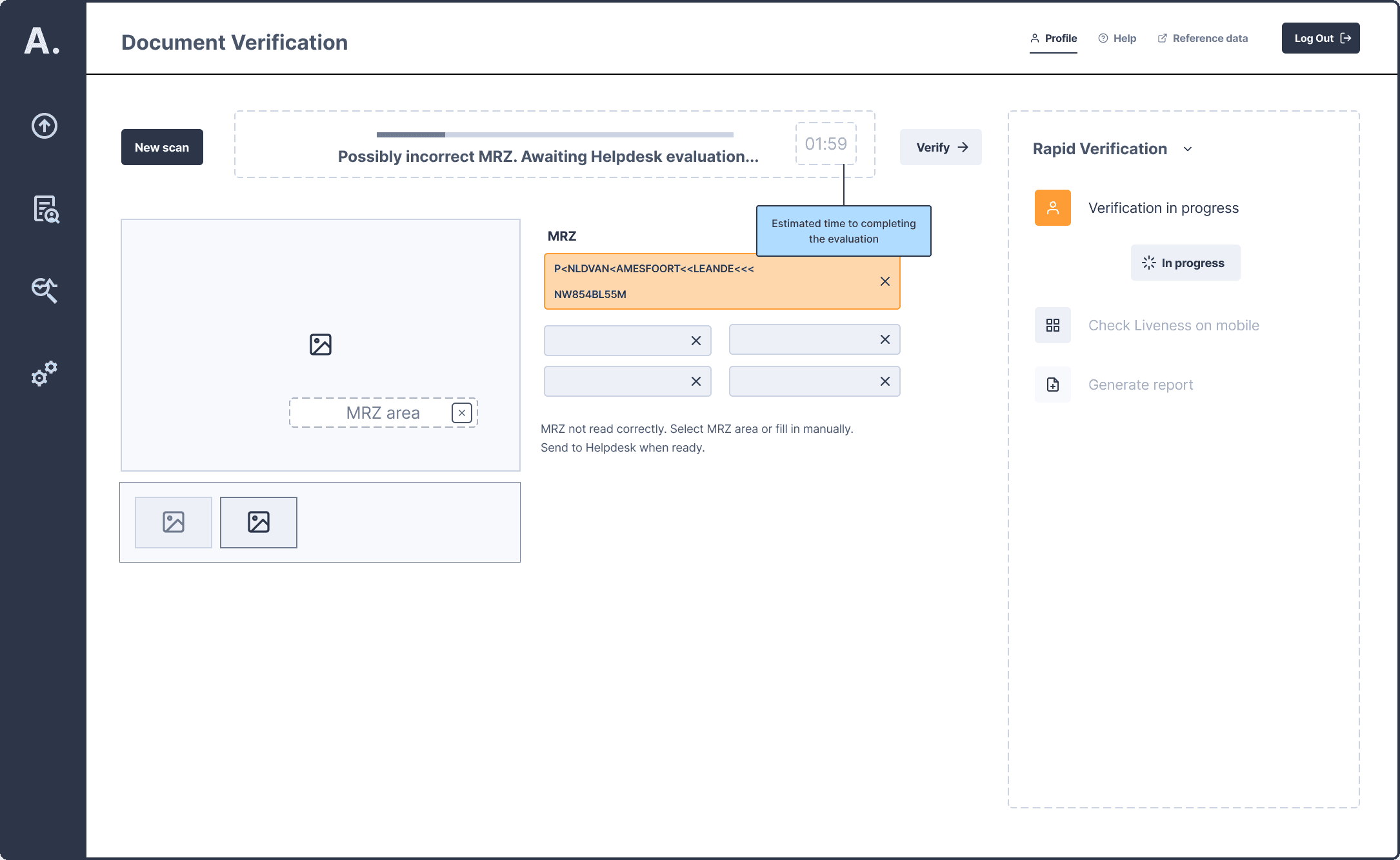

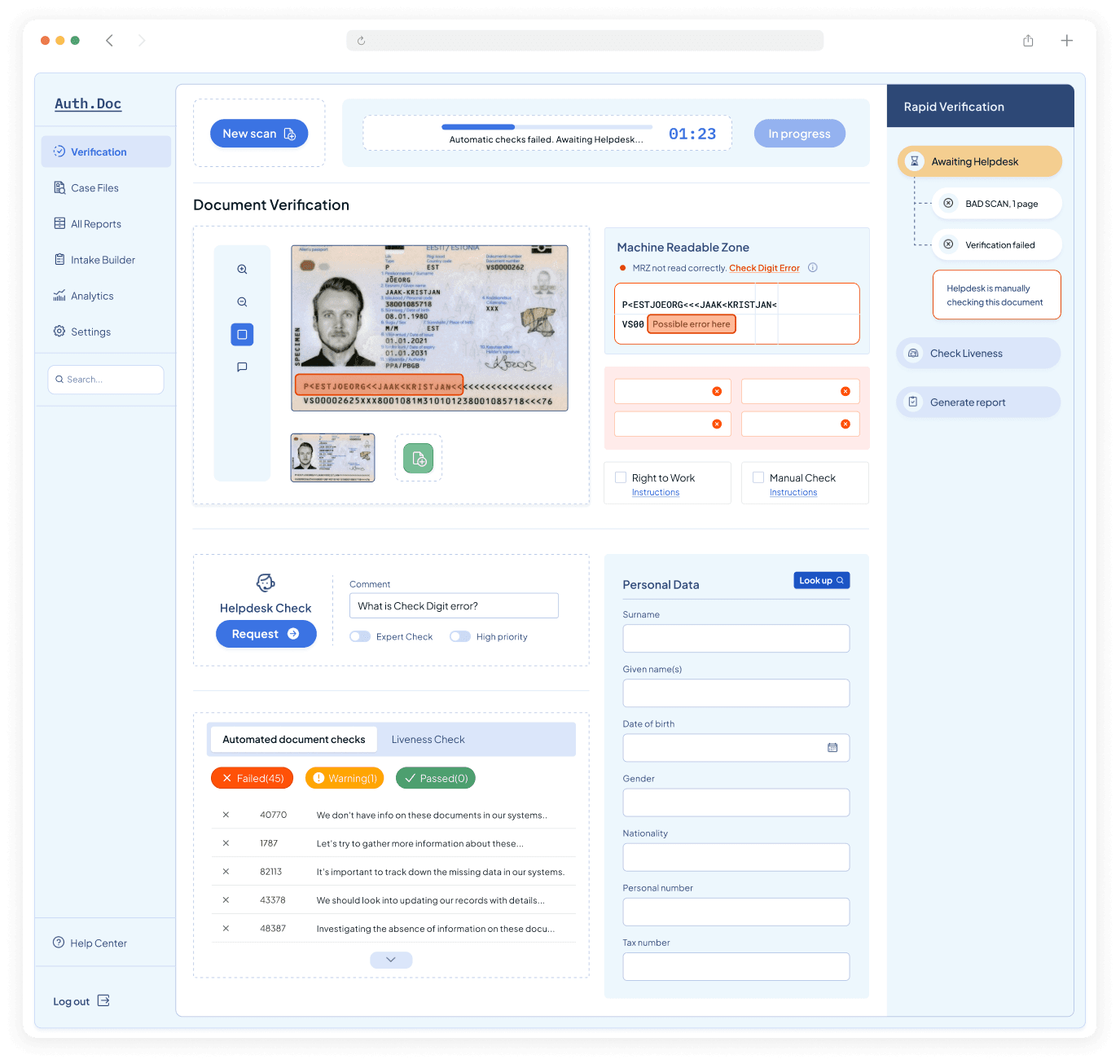

How might we effectively communicate the need for a Helpdesk check to the user?

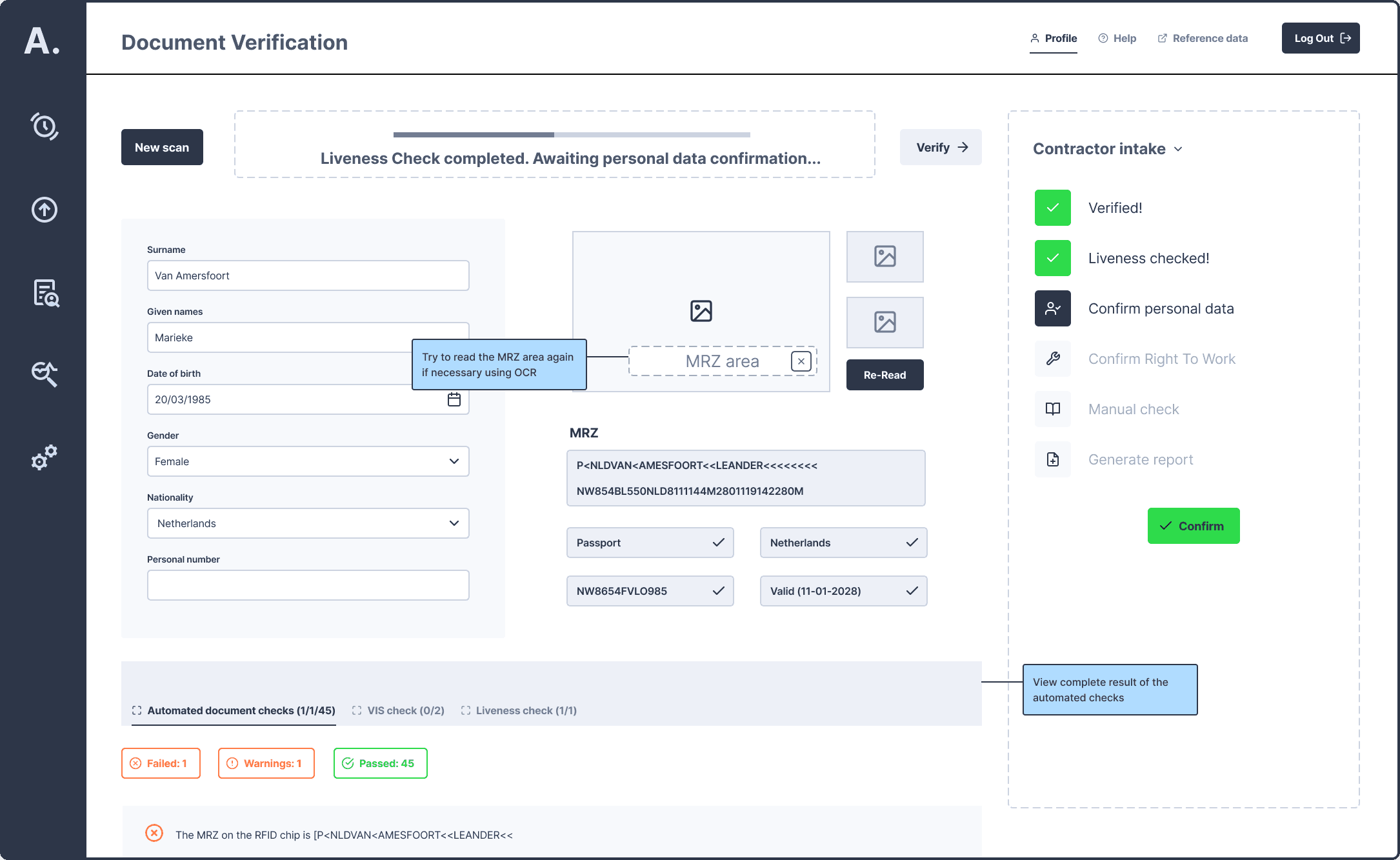

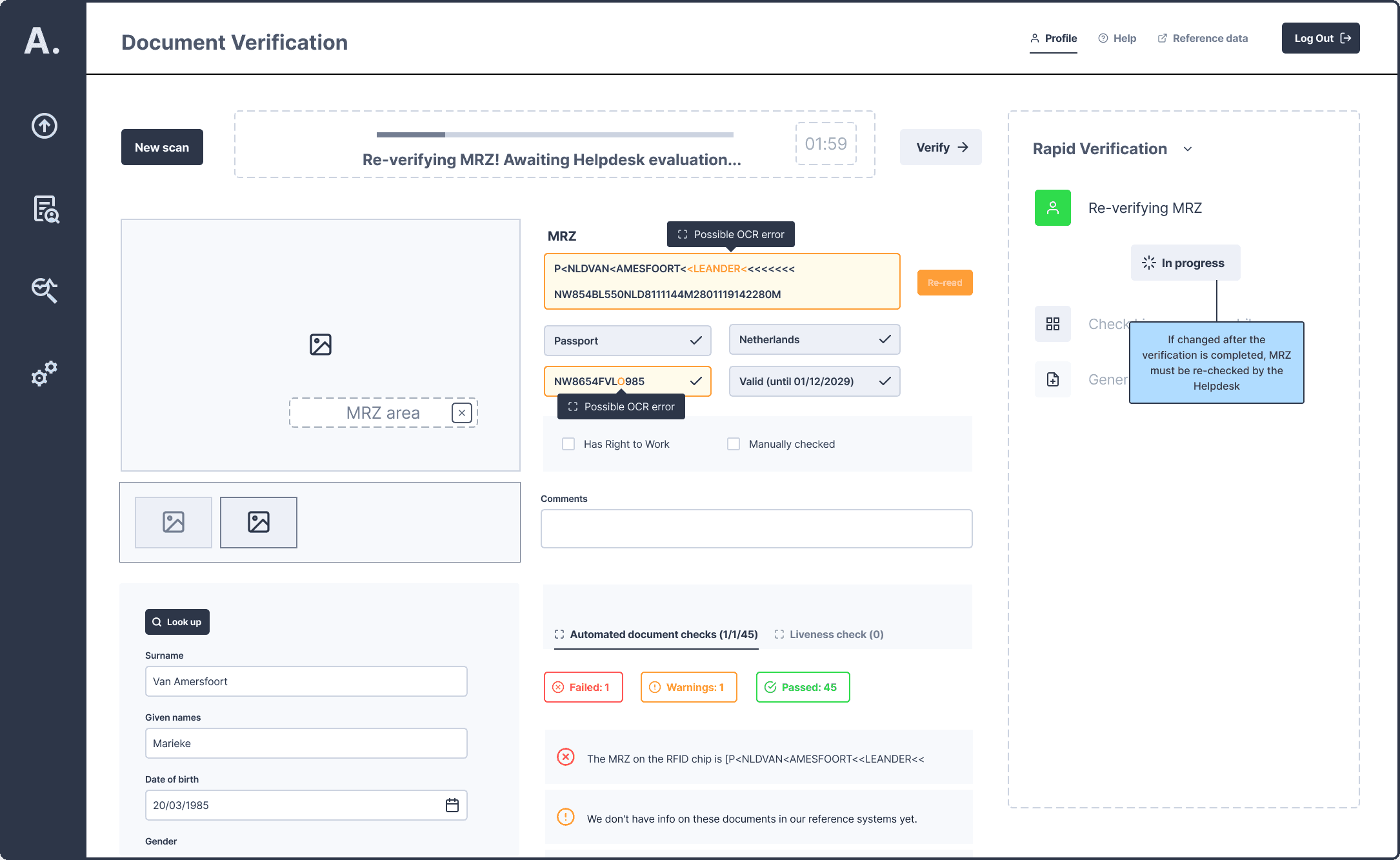

The most common case in which automatic verification stumbles is when the OCR (optical character recognition) module does not read the MRZ (machine-readable zone) correctly. In that case, the user gets clear instructions on how to try reading it again or to enter it manually and await the Helpdesk evaluation.

When automatic checks fail, the documents are forwarded to Helpdesk for further evaluation, and the user is given clear instructions on next steps.

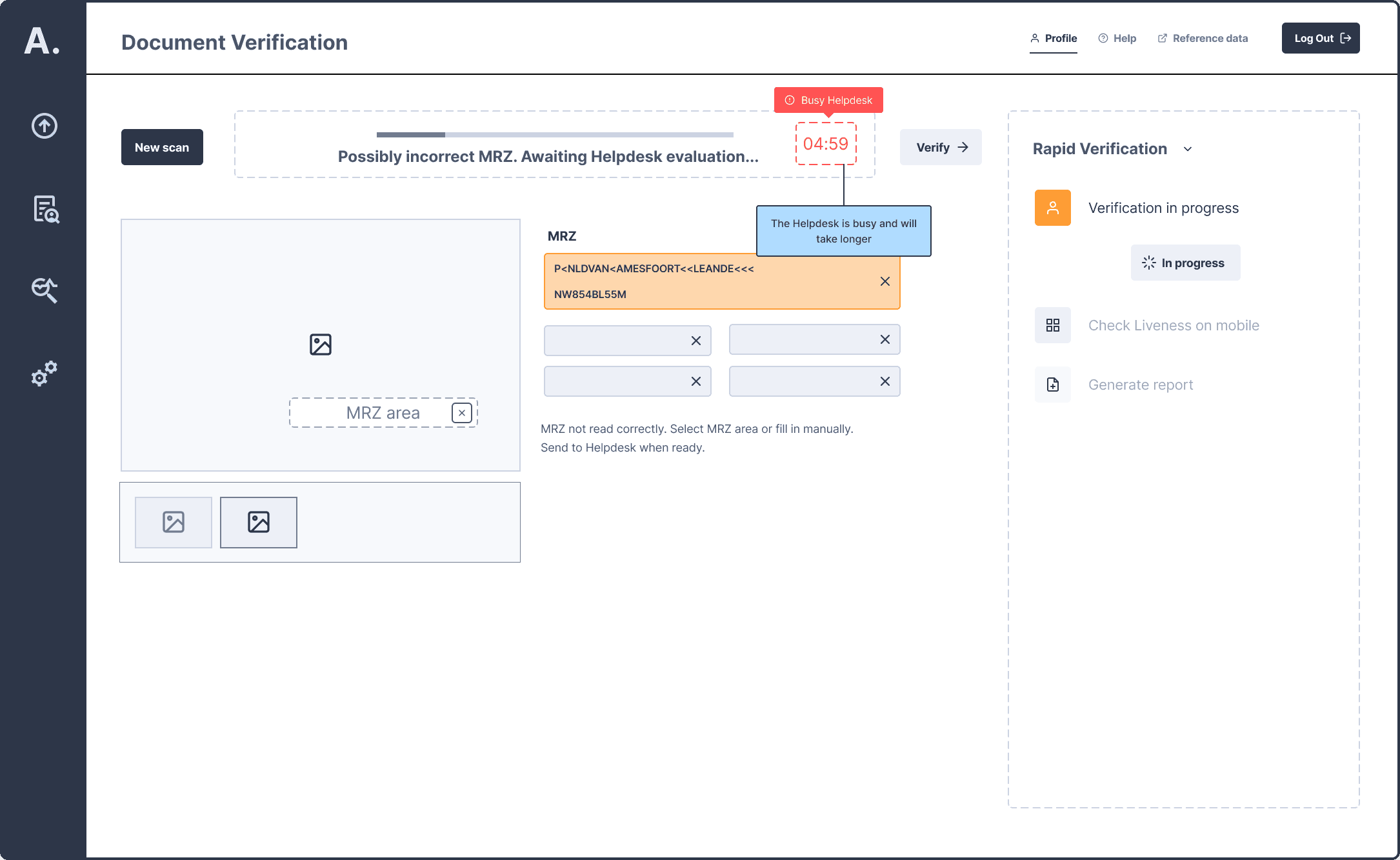

How might we clearly indicate the remaining time in the verification process?

A timer displays the estimated time remaining until a response from the Helpdesk, adjusting dynamically based on Helpdesk availability.

How might we assist users in correcting OCR errors before finalizing the verification?

Research revealed two common MRZ errors: the MRZ was sometimes inadvertently altered after verification, or it contained one or two characters that passed verification but were incorrectly read. To prevent these issues, I proposed a solution to flag potentially incorrect characters during the post-verification stage. This allows users to review and correct any errors before submitting the document for final Helpdesk confirmation.
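As background on how such flagging could work, the sketch below validates an MRZ field against its check digit using the scheme defined in ICAO Doc 9303 (weights 7, 3, 1; digits keep their value, A to Z map to 10 to 35, the filler "<" counts as 0) and lists the positions of characters that OCR engines often confuse. This is not AuthDoc's implementation: the confusable set and the `review_field` helper are hypothetical, and a check digit does not catch every misread, which is why the look-alike positions are reported even when the check passes.

```python
def char_value(ch):
    """Numeric value of an MRZ character per ICAO Doc 9303."""
    if "0" <= ch <= "9":
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {ch!r}")


def check_digit(field):
    """Check digit of an MRZ field using the repeating weights 7, 3, 1."""
    weights = (7, 3, 1)
    return sum(char_value(c) * weights[i % 3] for i, c in enumerate(field)) % 10


# Assumed set of look-alike characters worth highlighting for manual review.
CONFUSABLE = set("O0I1B8S5Z2")


def review_field(field, printed_check_digit):
    """Report whether the check digit matches and which positions look risky."""
    return {
        "check_digit_ok": check_digit(field) == int(printed_check_digit),
        "suspect_positions": [i for i, c in enumerate(field) if c in CONFUSABLE],
    }


# Document number from the ICAO specimen MRZ, then the same field
# with the digit 0 misread as the letter O.
print(review_field("L898902C3", "6"))
print(review_field("L8989O2C3", "6"))
```

A UI could highlight the suspect positions only when the check fails, or always show them at lower emphasis; that trade-off between speed and caution is a design decision rather than something the check digit itself settles.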

Final Designs

After several rounds of ideation and usability validation using the RITE (Rapid Iterative Testing and Evaluation) framework, I finalized the designs.

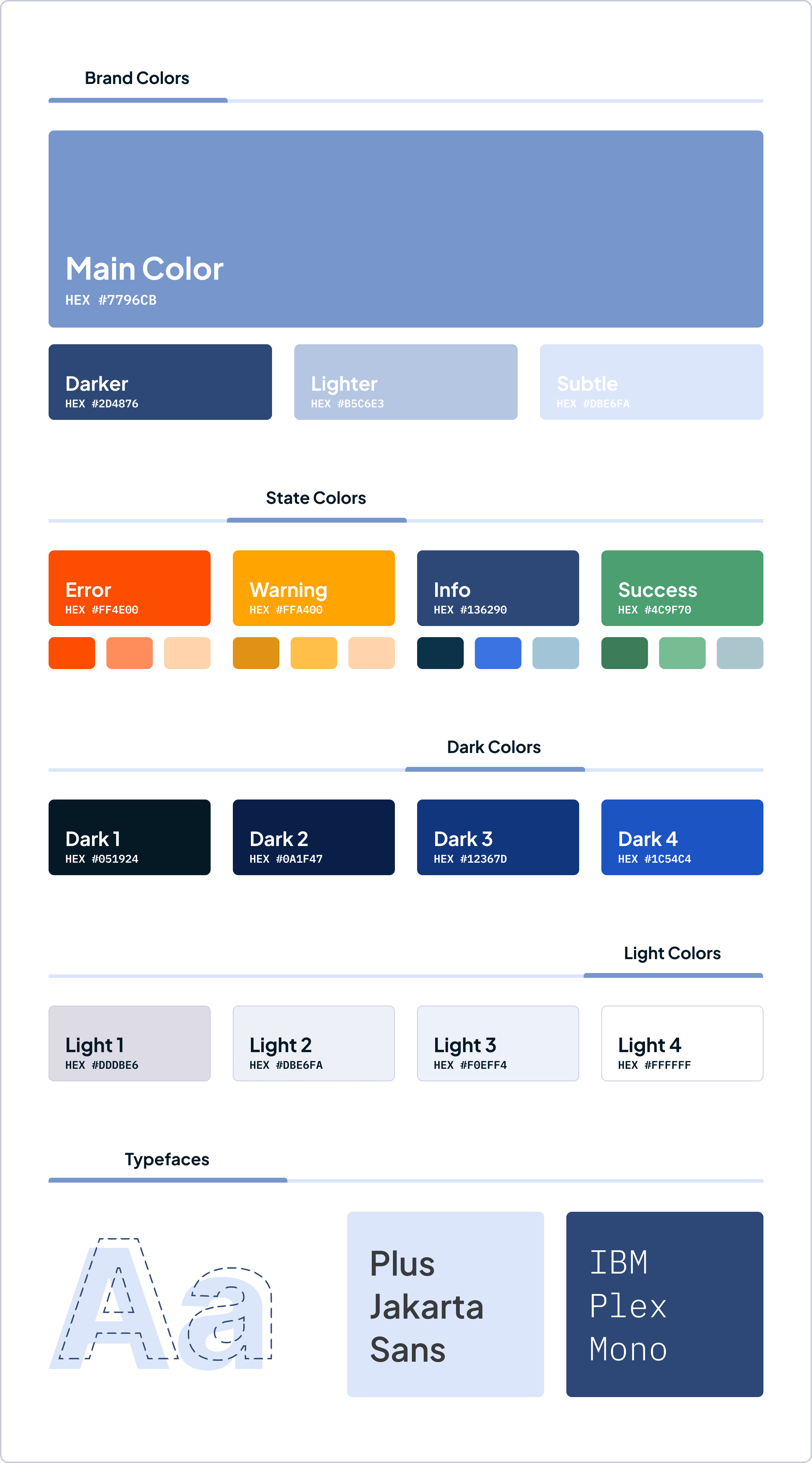

I applied the custom UI style guide I created for Blazor components, ensuring consistency and alignment with AuthDoc’s branding and development standards.

Results

In beta testing, the average task completion time dropped by 35%, allowing users to move through verification workflows much more efficiently, especially those who prioritize speed. Additionally, task success rates increased by 28%, and the SEQ score rose by 23%. New features like "Case Files" reduced errors and Helpdesk needs. The updated platform reached 92% adoption among legacy users, with users citing improved speed and usability. The average UMUX-Lite score also improved by 20%.

Reflection

Allowing Extra Time for the Research Phase: We underestimated the time needed for the research phase, because the coordination required in a B2B setting meant that interview planning often extended timelines. Allocating additional time for user and stakeholder interviews would have ensured a more representative sample.

Limitations of Low-Fidelity Wireframe Testing: Testing with low-fidelity wireframes provided initial insights into user flows, but didn’t capture the nuances of complex edge cases and unexpected behaviors within the application. This highlighted the necessity of conducting testing in a production environment to gather more comprehensive feedback.

© Copyright 2025 Natalia Golova - All Rights Reserved